Using AI To Pilot A Racing Car With The Voice

Using voice control for a racing game might seem like a quirky idea, but it's also a fantastic way to explore voice interfaces in the browser, starting with the Web Speech API.

The Idea

Florian, Guillaume and I came up with the idea for a racing game where you control the car using your voice. Instead of basic commands like "accelerate" or "brake," the concept was to mimic the sound of a car engine, using the pitch of your voice to control the car's speed. The higher the pitch, the faster the car would go.
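To make "the higher the pitch, the faster the car" concrete, here is a minimal TypeScript sketch of a linear pitch-to-speed mapping. The pitch range and top speed (`MIN_PITCH_HZ`, `MAX_PITCH_HZ`, `MAX_SPEED`) are hypothetical values chosen for illustration; the prototype itself ended up classifying engine sounds rather than measuring pitch in Hz.

```typescript
// Hypothetical tuning range: from a low hum to a high-pitched "engine" note.
const MIN_PITCH_HZ = 100;
const MAX_PITCH_HZ = 400;
const MAX_SPEED = 200; // hypothetical top speed, in arbitrary game units

/** Linearly map a pitch (in Hz) to a target speed, clamped to [0, MAX_SPEED]. */
function pitchToTargetSpeed(pitchHz: number): number {
  // Position of the pitch inside the range: 0 at the bottom, 1 at the top.
  const t = (pitchHz - MIN_PITCH_HZ) / (MAX_PITCH_HZ - MIN_PITCH_HZ);
  // Clamp so notes outside the range don't yield negative or excessive speeds.
  const clamped = Math.min(1, Math.max(0, t));
  return clamped * MAX_SPEED;
}
```

A linear mapping is the simplest choice; a curve (for example squaring `t`) would give finer control at low speeds.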

Here is a video of François, our manager, testing the prototype:

It's less spectacular than the screenshot at the top of this article, but you can already see the huge potential of the idea! You can also play it for free at https://marmelab.com/voiracing/.

To detect the pitch of the voice, I used TensorFlow.js and trained the model with Teachable Machine.

Web Speech API

In the early prototypes, I tried to use the Web Speech API to detect the pitch of the voice. However, it wasn't effective: the API is designed to turn speech into text, not to measure pitch, and pitch detection is a complex problem that requires an understanding of the human voice and of sound itself.

I couldn't achieve satisfactory results, so I decided to use deep learning instead. But training a deep learning model is not an easy task, is it?
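For comparison, a classic way to estimate pitch without machine learning is autocorrelation: find the lag at which the signal best matches a shifted copy of itself, then convert that period into a frequency. The sketch below is not what the game uses; the 80 to 1000 Hz search range and the 0.5 confidence cut-off are assumptions. In a browser, the samples could come from a Web Audio `AnalyserNode` via `getFloatTimeDomainData()`.

```typescript
/**
 * Estimate the fundamental frequency of a mono buffer by autocorrelation.
 * Returns null for silence or when no lag correlates strongly enough.
 */
function estimatePitch(
  samples: Float32Array,
  sampleRate: number,
  minHz = 80, // assumed lowest pitch of interest
  maxHz = 1000, // assumed highest pitch of interest
): number | null {
  // Convert the frequency range into a range of lags (in samples).
  const minLag = Math.floor(sampleRate / maxHz);
  const maxLag = Math.min(Math.ceil(sampleRate / minHz), samples.length - 1);

  // Energy at lag 0, used to normalise the correlation scores.
  let energy = 0;
  for (let i = 0; i < samples.length; i++) {
    energy += samples[i] * samples[i];
  }
  if (energy === 0) return null; // pure silence

  let bestLag = -1;
  let bestCorr = 0;
  for (let lag = minLag; lag <= maxLag; lag++) {
    // Correlate the signal with itself shifted by `lag` samples.
    let corr = 0;
    for (let i = 0; i + lag < samples.length; i++) {
      corr += samples[i] * samples[i + lag];
    }
    corr /= energy;
    if (corr > bestCorr) {
      bestCorr = corr;
      bestLag = lag;
    }
  }

  // Hypothetical confidence cut-off: weak peaks are treated as "no pitch".
  return bestLag > 0 && bestCorr > 0.5 ? sampleRate / bestLag : null;
}
```

This works well on clean tones; real voices are noisier, so a sturdier version would add windowing and checks against octave errors.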

Teachable Machine

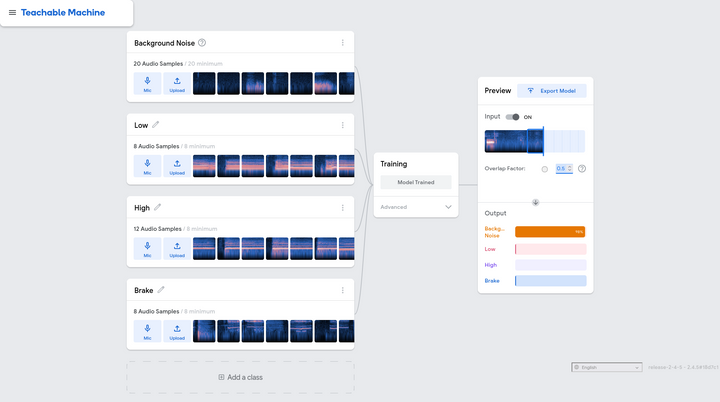

It turns out that it's quite easy to train an audio model in a few minutes, thanks to Teachable Machine.

Teachable Machine is an online tool from Google that lets you train a model based on audio samples. It's an excellent resource for quickly training a model with just a few clicks, and it's free to use; you don't even need to register.

In the Teachable Machine web UI, I created a new project and recorded a few samples of my voice imitating an engine:

- accelerating at full speed ("aaaAAAAAA") with the "High" label,

- maintaining speed ("mmmmmmmmmmmm") with the "Low" label, and

- braking ("iiiiiiiiii") with the "Brake" label.

I also recorded a few samples of background noise to train the model to ignore it. I recorded about 10 samples for each action. Then I clicked the "train" button, and after a few minutes, I had a model ready to be downloaded. I used this model in my prototype.
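Since the game reacts to these label names as plain strings, a mismatch between the Teachable Machine project and the code silently disables an action. A cheap safeguard is to compare the expected labels with the ones the model reports, for instance the array returned by `recognizer.wordLabels()` in the `@tensorflow-models/speech-commands` package. This is a sketch assuming the labels are exactly "High", "Low" and "Brake" plus the "Background Noise" class that Teachable Machine audio projects include by default; the `missingLabels` helper is hypothetical.

```typescript
// Labels the game expects the model to produce (assumed names, see above).
const EXPECTED_LABELS = ["Background Noise", "High", "Low", "Brake"] as const;

/** Return the expected labels that the trained model does not provide. */
function missingLabels(modelLabels: readonly string[]): string[] {
  return EXPECTED_LABELS.filter((label) => modelLabels.indexOf(label) === -1);
}
```

Calling it once after loading the model, and logging any result, catches typos such as "break" versus "Brake" before anyone starts shouting at the screen.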

TensorFlow.js

Next, I needed to create the application. To keep things straightforward, I wrote a single TypeScript file without React or any other framework, and used Vite to bundle it. Starting from the Teachable Machine example, I modified some parts to make it work with my model.

```typescript
import '@tensorflow/tfjs'; // speech-commands needs the TensorFlow.js runtime
import * as speechCommands from '@tensorflow-models/speech-commands';

// The model exported from Teachable Machine, served alongside the app.
const MODEL_URL = window.location.origin + '/voiracing/model/';

async function createModel() {
  const checkpointURL = MODEL_URL + 'model.json'; // model topology
  const metadataURL = MODEL_URL + 'metadata.json'; // model metadata

  const recognizer = speechCommands.create(
    'BROWSER_FFT', // Fourier transform type, not useful to change
    undefined, // speech commands vocabulary feature, not useful for your models
    checkpointURL,
    metadataURL,
  );

  // Wait until the model and its metadata have been fetched.
  await recognizer.ensureModelLoaded();

  return recognizer;
}

async function init() {
  const recognizer = await createModel();
  const classLabels = recognizer.wordLabels(); // get class labels
  const labelContainer = document.getElementById('app')!;

  // One line per class score, plus a final line for the speed.
  for (let i = 0; i < classLabels.length; i++) {
    labelContainer.appendChild(document.createElement('div'));
  }
  labelContainer.appendChild(document.createElement('div'));

  // listen() takes two arguments:
  // 1. A callback function that is invoked anytime a word is recognized.
  // 2. A configuration object with adjustable fields.
  await recognizer.listen(
    async result => {
      // Display every score and keep the label with the highest one.
      let action = 'Brake';
      let max = 0;
      for (let i = 0; i < classLabels.length; i++) {
        const score = result.scores[i] as number;
        labelContainer.children[i]!.textContent =
          classLabels[i] + ': ' + score.toFixed(2);
        if (score > max) {
          max = score;
          action = classLabels[i];
        }
      }

      // accelerate(), maintainSpeed(), brake(), decelerate(), getCurrentSpeed()
      // and update() are the game's own functions, defined elsewhere.
      switch (action) {
        case 'High':
          accelerate();
          break;
        case 'Low':
          maintainSpeed();
          break;
        case 'Brake': // must match the Teachable Machine label exactly
          brake();
          break;
        case 'Background Noise':
          decelerate();
          break;
      }

      labelContainer.children[classLabels.length]!.textContent =
        'Speed: ' + getCurrentSpeed();
      update(getCurrentSpeed());
    },
    {
      includeSpectrogram: false, // in case listen should return result.spectrogram
      probabilityThreshold: 0, // 0 is used to make it as smooth as possible, we want to detect idle as well
      invokeCallbackOnNoiseAndUnknown: true,
      overlapFactor: 0.75, // probably want between 0.5 and 0.75. More info in README
    },
  );

  // Stop the recognition in 5 seconds.
  // setTimeout(() => recognizer.stopListening(), 5000);
}

init();
```

This is nearly the entire code. I just added a few functions to control the car's speed and a gauge to display it.
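The speed helpers aren't shown in the post. Here is one minimal way they could work, assuming the speed changes by a fixed step each time the recognizer callback fires. The function names match the ones called in the `switch`, but their bodies and the constants (`MAX_SPEED`, `ACCELERATION`, `BRAKING`, `DRAG`) are hypothetical; the gauge-drawing `update()` is left out.

```typescript
// Hypothetical tuning constants, in arbitrary speed units per callback.
const MAX_SPEED = 100;
const ACCELERATION = 5;
const BRAKING = 10;
const DRAG = 2; // speed lost when only background noise is heard

let speed = 0;

// Keep the speed between 0 and MAX_SPEED.
const clampSpeed = (value: number): number =>
  Math.min(MAX_SPEED, Math.max(0, value));

function accelerate(): void {
  speed = clampSpeed(speed + ACCELERATION);
}

function maintainSpeed(): void {
  // Holding the "mmmm" sound keeps the current speed unchanged.
}

function brake(): void {
  speed = clampSpeed(speed - BRAKING);
}

function decelerate(): void {
  speed = clampSpeed(speed - DRAG);
}

function getCurrentSpeed(): number {
  return speed;
}
```

Because the callback rate depends on `overlapFactor`, these step sizes would need to be tuned together with it.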

Note: I set `probabilityThreshold` to 0 to make it as smooth as possible: the callback then runs on every prediction, not only on confident ones. I also want to detect an idle state, which causes the car's speed to decrease. I also enabled `invokeCallbackOnNoiseAndUnknown` so that the callback still fires on background noise, which the game treats as idle.
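To picture the effect of the threshold, here is a simplified, library-independent sketch of the decision step: take the highest-scoring label and discard it when its score does not exceed the threshold. This is my reading of how `probabilityThreshold` behaves, not the `speech-commands` source code, and `topLabel` is a hypothetical helper.

```typescript
interface Prediction {
  label: string;
  score: number;
}

/** Pick the best label, or null if its score is not above `threshold`. */
function topLabel(
  labels: readonly string[],
  scores: ArrayLike<number>,
  threshold: number,
): Prediction | null {
  // Argmax over the per-class scores.
  let best = -1;
  for (let i = 0; i < labels.length; i++) {
    if (best === -1 || scores[i] > scores[best]) {
      best = i;
    }
  }
  // No labels, or the winner isn't confident enough: no decision this frame.
  if (best === -1 || scores[best] <= threshold) {
    return null;
  }
  return { label: labels[best], score: scores[best] };
}
```

With a threshold of 0, every frame yields a decision, including frames where "Background Noise" wins, which is what lets the car slow down when the player goes quiet. A higher threshold such as 0.75 would instead skip uncertain frames and leave the speed untouched.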

Using TensorFlow.js and Teachable Machine turned out to be easier than I expected. I managed to develop a working prototype in just a few hours.

Conclusion

I had a great time working with TensorFlow.js and Teachable Machine. They are fantastic tools for quickly training a model and incorporating it into a web application. I will definitely use them again in the future.

At the time of writing this blog post, the game isn't complete, but I have a functional prototype. While I've only implemented pitch detection so far, I plan to add more features later. I also want to try using these tools to train a model to detect head direction. I'll write a blog post about it once I have something functional.

The game's source code is available on GitHub at marmelab/voiracing.