Automating Accessibility and Performance Testing with Puppeteer and axe-core

In our previous article on accessibility, we used Selenium and axe-core to validate that our pages followed best practices. However, as we pointed out in the conclusion, making a site accessible should not only mean making it usable by people with disabilities. Many people do not have the latest hardware with fast CPUs. Some don’t have a high-bandwidth connection. Making a site accessible also means ensuring it loads fast enough to be usable by the widest range of people.

To ensure a site is accessible (performance included), you should add accessibility and performance checks to your continuous integration process. Selenium won’t help here, but Puppeteer, a Node.js library that controls a headless Chrome or Chromium over the DevTools Protocol, might very well do. Let’s see how.

The Goal: Accessibility Tests in CI

I’m going to set up automated end-to-end tests in a React application created with create-react-app (CRA). These tests will be executed by a Continuous Integration (CI) server, in my case Travis CI.

At marmelab, we believe end-to-end tests should run on a production-like version of the application. This means we’ll have to build the CRA application using its dedicated command, yarn build.

The Tools: Jest, Puppeteer and Axe

Last year, at the dotJS 2017 conference, Trent Willis presented The Future of Web Testing and showed us how we could use the DevTools Protocol to access everything we can see in the Chrome DevTools, including performance metrics.
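To make the protocol less abstract: the DevTools Protocol exchanges JSON messages, typically over a WebSocket. Each command carries an `id`, a `method` name and its `params`, and Chrome’s response echoes the same `id`. The sketch below only builds such messages and sends nothing; `buildCommand` is a hypothetical helper, since Puppeteer’s `CDPSession.send(method, params)` does this bookkeeping for us. The methods are the ones used later in this article.

```javascript
// Hypothetical helper: builds DevTools Protocol command messages without sending them.
// Each command gets a unique id so that the matching response ({ id, result }) can be found.
let nextId = 0;

const buildCommand = (method, params = {}) => ({ id: ++nextId, method, params });

// Commands used later in this article, as they would travel over the wire
const messages = [
  buildCommand("Performance.enable"),
  buildCommand("Emulation.setCPUThrottlingRate", { rate: 4 }),
  buildCommand("Performance.getMetrics"),
];

for (const message of messages) {
  console.log(JSON.stringify(message));
}
// {"id":1,"method":"Performance.enable","params":{}}
// {"id":2,"method":"Emulation.setCPUThrottlingRate","params":{"rate":4}}
// {"id":3,"method":"Performance.getMetrics","params":{}}
```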

Armed with this knowledge, we’re going to set up our project with these tools:

- jest as the test runner

- puppeteer to access our pages and collect our metrics

- axe-core, an accessibility engine for automated Web UI testing.

The Process: Test Setup

We begin by initializing our app and installing our dependencies:

```shell
yarn create react-app accessibility
cd accessibility
yarn add -D axe-core jest-puppeteer puppeteer babel-preset-env serve
```

We’ll be using jest-puppeteer to configure Puppeteer automatically for us. This package can also take care of starting the server before running the tests! However, it requires the next version of react-scripts:

```json
{
  "dependencies": {
    "react": "^16.4.1",
    "react-dom": "^16.4.1",
    "react-scripts": "next"
  }
}
```

We add the following script to the package.json to run the tests:

```json
{
  "scripts": {
    "test:accessibility": "JEST_PUPPETEER_CONFIG=./accessibility/jest-puppeteer.config.js jest --runInBand --verbose --colors --config ./accessibility/jest.config.json"
  }
}
```

Now, we need to configure Jest through the ./accessibility/jest.config.json file (passed to Jest by the test:accessibility script):

```json
{
  "preset": "jest-puppeteer",
  "testRegex": "/.*\\.(test|spec)\\.js$"
}
```

Let’s add a custom ./accessibility/.babelrc file for Jest to pick up, so that we can write the tests with ES2015+ syntax such as import:

```json
{
  "presets": ["env"]
}
```

Almost there: jest-puppeteer needs to know how to start the server:

```js
// in ./accessibility/jest-puppeteer.config.js
module.exports = {
  server: {
    command: "./node_modules/.bin/serve ./build",
    // jest-puppeteer waits until this port responds before starting the tests.
    // 5000 is the default port of serve
    port: 5000,
    usedPortAction: "error", // If the port is already in use, stop everything
    launchTimeout: 5000, // Wait 5 seconds max before timing out
  },
};
```

Finally, as we love Makefiles at marmelab, we also add one:

```makefile
.PHONY: default install help start build test test-unit test-accessibility

.DEFAULT_GOAL := help

install: ## Install the dependencies
	yarn

help:
	@grep -E '^[a-zA-Z_-]+:.*?## .*$$' $(MAKEFILE_LIST) | sort | awk 'BEGIN {FS = ":.*?## "}; {printf "\033[36m%-30s\033[0m %s\n", $$1, $$2}'

start: ## Run the app in dev mode
	yarn start

build: ## Build the app in production mode
	yarn build

test-unit: ## Run the unit tests
	yarn test

test-accessibility: ## Run the accessibility tests
	@if [ "$(build)" != "false" ]; then \
		echo 'Building application (call "make build=false test-accessibility" to skip the build)...'; \
		${MAKE} build; \
	fi
	yarn test:accessibility

test: test-unit test-accessibility ## Run all tests
```

All good, we’re ready to write tests!
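One detail of the Jest configuration deserves a second look: Jest checks `testRegex` against each file’s full path, and an unescaped `.` matches any character. The quick Node sketch below compares an unescaped pattern, `/.*.(test|spec)\.js`, with an escaped, end-anchored one on a few made-up paths.

```javascript
// Compare how two testRegex candidates treat a few made-up absolute paths.
const loose = new RegExp("/.*.(test|spec)\\.js"); // "." matches any character, no end anchor
const strict = new RegExp("/.*\\.(test|spec)\\.js$"); // literal ".", must end with ".js"

const paths = [
  "/app/accessibility/home.test.js",
  "/app/accessibility/form.spec.js",
  "/app/accessibility/performance.js",
  "/app/src/latest.js", // "a" + "test" + ".js" fools the loose pattern
  "/app/accessibility/home.test.js.orig", // no end anchor fools the loose pattern
];

for (const path of paths) {
  console.log(path, "loose:", loose.test(path), "strict:", strict.test(path));
}
```

Only the two real test files match the escaped, anchored pattern, which makes it the safer choice for jest.config.json.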

Accessibility Tests With Axe

We used the code from the last article in some projects and refined it along the way, mostly by improving the error messages. Besides, there is no axe-puppeteer library yet, so we had to find a way to run axe in the pages we wanted to test. Here is the new version of the axe utility functions we now use with Puppeteer:

```js
// in ./accessibility/accessibility.js
const { printReceived } = require("jest-matcher-utils");
const { resolve } = require("path");
const { realpathSync, mkdirSync, existsSync } = require("fs");

const PATH_TO_AXE = "./node_modules/axe-core/axe.min.js";
const appDirectory = realpathSync(process.cwd());

const resolvePath = (relativePath) => resolve(appDirectory, relativePath);

exports.analyzeAccessibility = async (page, screenshotPath, options = {}) => {
  // Inject the axe script in our page: it defines a global `axe` there
  await page.addScriptTag({ path: resolvePath(PATH_TO_AXE) });
  // We make sure that axe is executed in the next tick after
  // the page emits the load event, giving priority to the
  // evaluation of the page's original JS
  const accessibilityReport = await page.evaluate((axeOptions) => {
    return new Promise((resolve) => {
      setTimeout(resolve, 0);
    }).then(() => axe.run(axeOptions)); // `axe` is the global injected above
  }, options);

  if (
    screenshotPath &&
    (accessibilityReport.violations.length || accessibilityReport.incomplete.length)
  ) {
    const path = `${process.cwd()}/screenshots`;
    if (!existsSync(path)) {
      mkdirSync(path);
    }
    await page.screenshot({ path: `${path}/${screenshotPath}`, fullPage: true });
  }

  return accessibilityReport;
};

const defaultOptions = { violationsThreshold: 0, incompleteThreshold: 0 };

const printInvalidNode = (node) =>
  `- ${printReceived(node.html)}\n\t${node.any.map((check) => check.message).join("\n\t")}`;

const printInvalidRule = (rule) =>
  `${printReceived(rule.help)} on ${rule.nodes.length} nodes\r\n${rule.nodes.map(printInvalidNode).join("\n")}`;

// Add a new method to expect assertions with a very detailed error report
expect.extend({
  toHaveNoAccessibilityIssues(accessibilityReport, options) {
    let violations = [];
    let incomplete = [];
    const finalOptions = Object.assign({}, defaultOptions, options);

    if (accessibilityReport.violations.length > finalOptions.violationsThreshold) {
      violations = [
        `Expected to have no more than ${finalOptions.violationsThreshold} violations. Detected ${accessibilityReport.violations.length} violations:\n`,
      ].concat(accessibilityReport.violations.map(printInvalidRule));
    }

    if (
      finalOptions.incompleteThreshold !== false &&
      accessibilityReport.incomplete.length > finalOptions.incompleteThreshold
    ) {
      incomplete = [
        `Expected to have no more than ${finalOptions.incompleteThreshold} incomplete. Detected ${accessibilityReport.incomplete.length} incomplete:\n`,
      ].concat(accessibilityReport.incomplete.map(printInvalidRule));
    }

    const message = [].concat(violations, incomplete).join("\n");
    const pass =
      accessibilityReport.violations.length <= finalOptions.violationsThreshold &&
      (finalOptions.incompleteThreshold === false ||
        accessibilityReport.incomplete.length <= finalOptions.incompleteThreshold);

    return { pass, message: () => message };
  },
});
```

And we’re ready to test the first page!
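Before writing that first test, it can help to see the matcher’s pass/fail rule on its own, since it is buried among the message formatting. The sketch below restates it as a plain function (`passesAccessibilityThresholds` is a hypothetical name, not part of our helpers) and runs it against a hand-written object holding only the `violations` and `incomplete` arrays of an `axe.run()` result; the `color-contrast` entry is illustrative, not real output.

```javascript
// Hypothetical helper: the pass/fail rule of toHaveNoAccessibilityIssues,
// extracted as a plain function so it can be checked without Jest.
const defaultOptions = { violationsThreshold: 0, incompleteThreshold: 0 };

const passesAccessibilityThresholds = (report, options = {}) => {
  const { violationsThreshold, incompleteThreshold } = { ...defaultOptions, ...options };
  const violationsOk = report.violations.length <= violationsThreshold;
  // incompleteThreshold: false disables the check on "incomplete" results
  const incompleteOk =
    incompleteThreshold === false || report.incomplete.length <= incompleteThreshold;
  return violationsOk && incompleteOk;
};

// Hand-written object with only the two arrays the rule reads
// (a real axe.run() result contains many more fields).
const report = {
  violations: [],
  incomplete: [{ id: "color-contrast", nodes: [] }],
};

console.log(passesAccessibilityThresholds(report)); // false: 1 incomplete > 0
console.log(passesAccessibilityThresholds(report, { incompleteThreshold: false })); // true
console.log(passesAccessibilityThresholds(report, { incompleteThreshold: 1 })); // true
```

In a test, the second call corresponds to `expect(accessibilityReport).toHaveNoAccessibilityIssues({ incompleteThreshold: false })`, which is handy when you would rather review axe’s incomplete results manually.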

// in ./accessibililty/home.test.jsimport { analyzeAccessibility } from "./accessibility";

describe("Home page", () => { beforeAll(async () => { await page.setViewport({ width: 1280, height: 1024 }); });

it("should not have accessibility issues", async () => { // We don't put this line in the beforeAll handler as our performance tests will need further configuration await page.goto("http://localhost:5000", { waitUntil: "load" }); const accessibilityReport = await analyzeAccessibility( page, `home.accessibility.png`, );

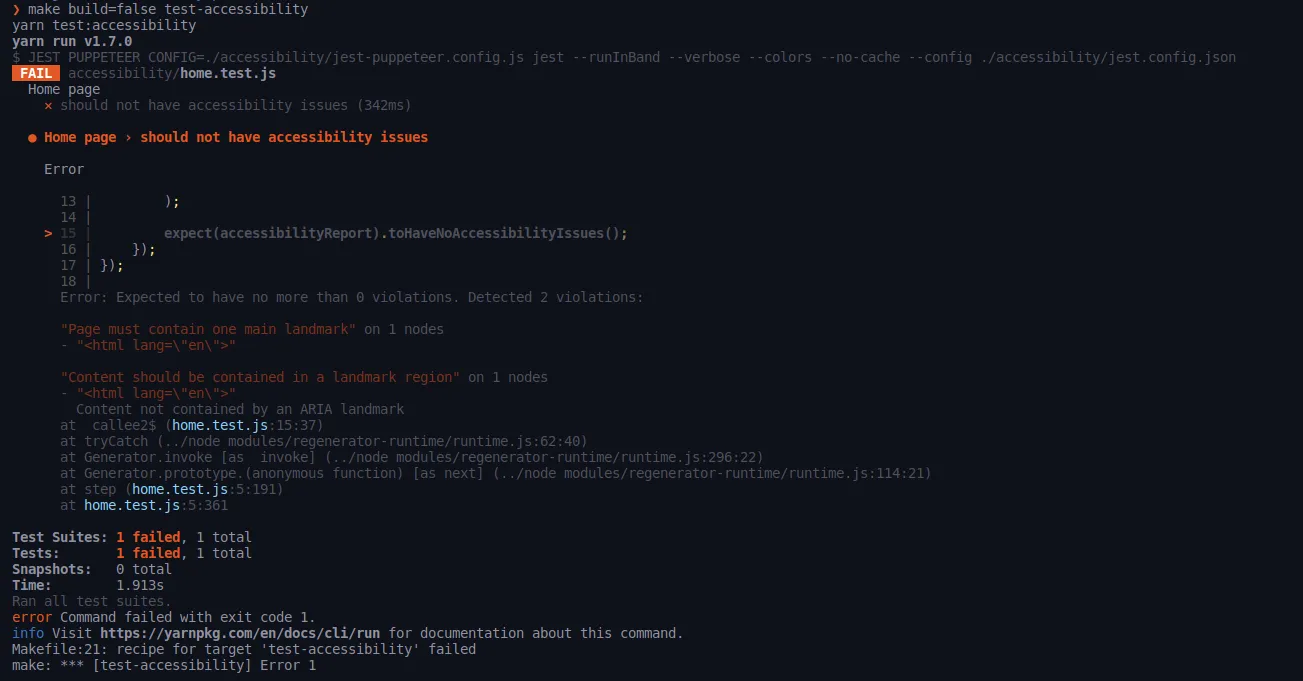

expect(accessibilityReport).toHaveNoAccessibilityIssues(); });});Let’s run it!

Now let’s fix this in ./src/App.js by replacing the p element with a main element:

import React, { Component } from "react";import logo from "./logo.svg";import "./App.css";

class App extends Component { render() { return ( <div className="App"> <header className="App-header"> <Image src={logo} className="App-logo" alt="logo" /> <h1 className="App-title">Welcome to React</h1> </header> <main className="App-intro"> To get started, edit <code>src/App.js</code> and save to reload. </main> </div> ); }}

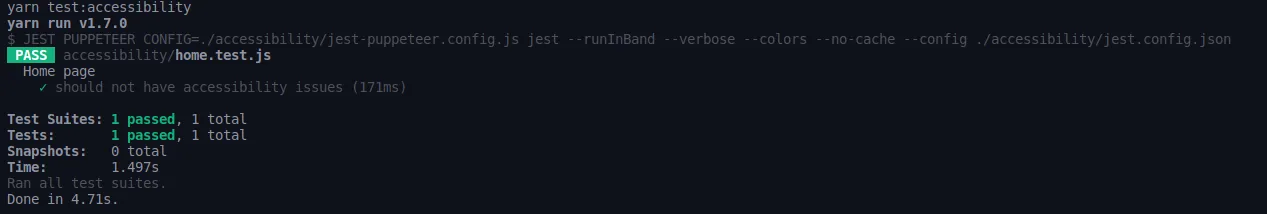

export default App;And if we run the tests again, we now get this:

Time to tackle the performance tests!

Performance Tests With Puppeteer

The first performance metric we are going to check is the time it takes for the DOM to become interactive (the domInteractive timing). To simplify assertions, let’s add a toBeInteractiveUnder() custom matcher to expect:

```js
// in ./accessibility/performance.js
const { printExpected, printReceived } = require("jest-matcher-utils");
const ms = require("ms"); // converts between "1s"-style strings and milliseconds

const POOR_DEVICE = "POOR_DEVICE";
const MEDIUM_DEVICE = "MEDIUM_DEVICE";
const FAST_DEVICE = "FAST_DEVICE";

exports.POOR_DEVICE = POOR_DEVICE;
exports.MEDIUM_DEVICE = MEDIUM_DEVICE;
exports.FAST_DEVICE = FAST_DEVICE;

exports.getSimplePagePerformanceMetrics = async (page, url, deviceType) => {
  const client = await page.target().createCDPSession();
  if (deviceType) {
    await emulateDevice(client, deviceType);
  }
  await client.send("Performance.enable");

  await page.goto(url, { waitUntil: "domcontentloaded" });

  const performanceTiming = JSON.parse(
    await page.evaluate(() => JSON.stringify(window.performance.timing)),
  );

  // Here are some of the metrics we can get
  return extractDataFromPerformanceTiming(performanceTiming, [
    "requestStart", "responseStart", "responseEnd",
    "domLoading", "domInteractive",
    "domContentLoadedEventStart", "domContentLoadedEventEnd",
    "domComplete", "loadEventStart", "loadEventEnd",
  ]);
};

// Throughputs are expressed in bytes per second, latency in milliseconds
const emulateDevice = async (client, deviceType) => {
  switch (deviceType) {
    case POOR_DEVICE:
      await client.send("Network.enable");
      // Simulate slow network
      await client.send("Network.emulateNetworkConditions", {
        offline: false,
        latency: 200,
        downloadThroughput: (768 * 1024) / 8,
        uploadThroughput: (330 * 1024) / 8,
      });
      // Simulate poor CPU
      await client.send("Emulation.setCPUThrottlingRate", { rate: 6 });
      break;
    case MEDIUM_DEVICE:
      await client.send("Network.enable");
      // Simulate medium network
      await client.send("Network.emulateNetworkConditions", {
        offline: false,
        latency: 200,
        downloadThroughput: (768 * 1024) / 8,
        uploadThroughput: (330 * 1024) / 8,
      });
      // Simulate medium CPU
      await client.send("Emulation.setCPUThrottlingRate", { rate: 4 });
      break;
    case FAST_DEVICE:
      await client.send("Network.enable");
      // Simulate fast network but still in "real" conditions
      await client.send("Network.emulateNetworkConditions", {
        offline: false,
        latency: 28,
        downloadThroughput: (5 * 1024 * 1024) / 8,
        uploadThroughput: (1024 * 1024) / 8,
      });
      break;
    default:
      console.error(`Profile ${deviceType} is not available`);
  }
};

// Make every timing relative to the start of the navigation
const extractDataFromPerformanceTiming = (timing, dataNames) => {
  const navigationStart = timing.navigationStart;

  return dataNames.reduce((acc, name) => {
    acc[name] = timing[name] - navigationStart;
    return acc;
  }, {});
};

expect.extend({
  toBeInteractiveUnder(metrics, time) {
    const milliseconds = typeof time === "number" ? time : ms(time);
    return {
      pass: metrics.domInteractive <= milliseconds,
      message() {
        return `Expected page to be interactive under ${printExpected(`${ms(milliseconds)}`)}. It was interactive in ${printReceived(`${ms(metrics.domInteractive)}`)}`;
      },
    };
  },
});
```

And here are our tests:

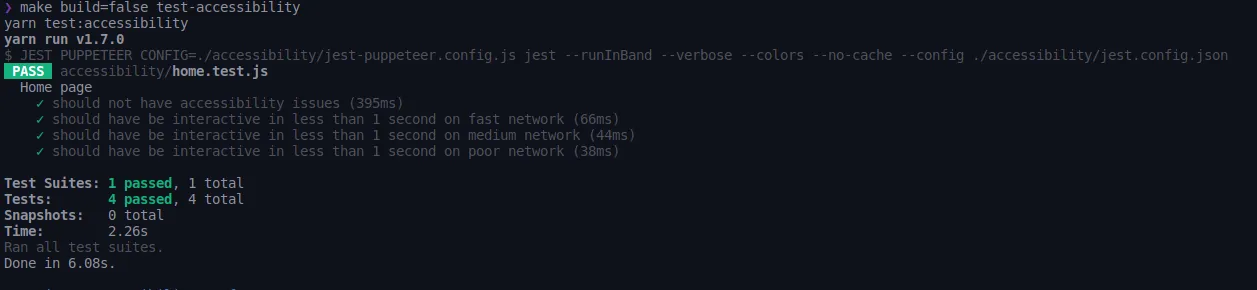

// in ./accessibililty/home.test.js// ...import { FAST_DEVICE, MEDIUM_DEVICE, POOR_DEVICE, getSimplePagePerformanceMetrics,} from "./performances";

describe("Home page", () => { // ... it("should have be interactive in less than 1 second on fast device", async () => { const metrics = await getSimplePagePerformanceMetrics( page, "http://localhost:5000", FAST_DEVICE, ); expect(metrics).toBeInteractiveUnder(1000); });

it("should have be interactive in less than 1 second on medium device", async () => { const metrics = await getSimplePagePerformanceMetrics( page, "http://localhost:5000", MEDIUM_DEVICE, ); expect(metrics).toBeInteractiveUnder(); });

it("should have be interactive in less than 1 second on poor device", async () => { const metrics = await getSimplePagePerformanceMetrics( page, "http://localhost:5000", POOR_DEVICE, ); expect(metrics).toBeInteractiveUnder(1000); });});
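The switch in emulateDevice can also be expressed as data, which makes the profiles easier to compare and lets us check the exact sequence of DevTools commands with a fake client instead of a real browser. The sketch below is a hypothetical refactoring (`DEVICE_PROFILES` and `emulateProfile` are our names, not part of the helpers above). The network and CPU values are copied from emulateDevice; two behaviors are our own assumptions: an unknown profile throws instead of logging, and FAST_DEVICE explicitly resets CPU throttling to rate 1, since the tests reuse the same page and we have not verified whether a slowdown set by an earlier test could linger.

```javascript
// Hypothetical table-driven variant of emulateDevice.
// Throughputs are in bytes per second, latency in milliseconds.
const DEVICE_PROFILES = {
  POOR_DEVICE: {
    network: { offline: false, latency: 200, downloadThroughput: (768 * 1024) / 8, uploadThroughput: (330 * 1024) / 8 },
    cpuRate: 6,
  },
  MEDIUM_DEVICE: {
    network: { offline: false, latency: 200, downloadThroughput: (768 * 1024) / 8, uploadThroughput: (330 * 1024) / 8 },
    cpuRate: 4,
  },
  FAST_DEVICE: {
    network: { offline: false, latency: 28, downloadThroughput: (5 * 1024 * 1024) / 8, uploadThroughput: (1024 * 1024) / 8 },
    cpuRate: 1, // Assumption: explicitly reset throttling (1 = no slowdown)
  },
};

// `client` only needs a send(method, params) method, like Puppeteer's CDPSession
const emulateProfile = async (client, deviceType) => {
  const profile = DEVICE_PROFILES[deviceType];
  if (!profile) {
    throw new Error(`Profile ${deviceType} is not available`);
  }
  await client.send("Network.enable");
  await client.send("Network.emulateNetworkConditions", profile.network);
  await client.send("Emulation.setCPUThrottlingRate", { rate: profile.cpuRate });
};

// A fake client that records commands instead of talking to Chrome
const sent = [];
const fakeClient = {
  send: async (method, params) => {
    sent.push({ method, params });
  },
};

emulateProfile(fakeClient, "MEDIUM_DEVICE").then(() => {
  console.log(sent.map((command) => command.method));
});
```

With a real session, `emulateProfile(await page.target().createCDPSession(), "POOR_DEVICE")` would replace the emulateDevice call.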

Advanced Performance Metrics

You might also be interested in other metrics, such as the time to first meaningful paint. These metrics are not available through the method we used in the previous section. There is another way, though, which we implemented in the hasItsFirstMeaningfulPaintUnder() helper.

```js
// in ./accessibility/performance.js

// Performance.getMetrics returns values in seconds: convert them to milliseconds
const getTimeFromPerformanceMetrics = (metrics, name) =>
  metrics.metrics.find((x) => x.name === name).value * 1000;

const extractDataFromPerformanceMetrics = (metrics, dataNames) => {
  const navigationStart = getTimeFromPerformanceMetrics(metrics, "NavigationStart");

  return dataNames.reduce((acc, name) => {
    const value = getTimeFromPerformanceMetrics(metrics, name);
    // Timestamps are made relative to NavigationStart; *Duration metrics are already elapsed times
    acc[name] = name.endsWith("Duration") ? value : value - navigationStart;
    return acc;
  }, {});
};

exports.getDetailedPagePerformanceMetrics = async (page, url, deviceType = FAST_DEVICE) => {
  const client = await page.target().createCDPSession();

  await emulateDevice(client, deviceType);
  await client.send("Performance.enable");

  await page.goto(url, { waitUntil: "domcontentloaded" });

  const performanceMetrics = await client.send("Performance.getMetrics");

  return extractDataFromPerformanceMetrics(
    performanceMetrics,
    // Here are some of the metrics we can get
    [
      "DomContentLoaded",
      "FirstMeaningfulPaint",
      "LayoutDuration",
      "NavigationStart",
      "RecalcStyleDuration",
      "ScriptDuration",
      "TaskDuration",
    ],
  );
};

expect.extend({
  // ...
  hasItsFirstMeaningfulPaintUnder(metrics, time) {
    const milliseconds = typeof time === "number" ? time : ms(time);
    return {
      pass: metrics.FirstMeaningfulPaint <= milliseconds,
      message() {
        return `Expected page to have its first meaningful paint under ${printExpected(`${ms(milliseconds)}`)}. It had its first meaningful paint after ${printReceived(`${ms(metrics.FirstMeaningfulPaint)}`)}`;
      },
    };
  },
});
```

And we can use them in our tests like this:

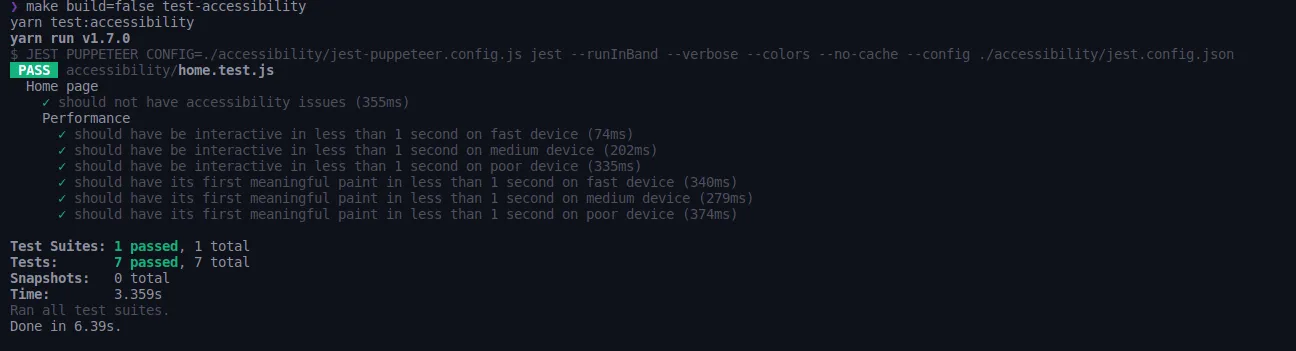

// in ./accessibililty/home.test.jsimport { FAST_DEVICE, MEDIUM_DEVICE, POOR_DEVICE, getSimplePagePerformanceMetrics, getDetailedPagePerformanceMetrics,} from "./performances";

describe("Home page", () => { // ... it("should have its first meaningful paint in less than 1 second on fast device", async () => { const metrics = await getDetailedPagePerformanceMetrics( page, "http://localhost:5000", FAST_DEVICE, ); expect(metrics).hasItsFirstMeaningfulPaintUnder(500); });

it("should have its first meaningful paint in less than 1 second on medium device", async () => { const metrics = await getDetailedPagePerformanceMetrics( page, "http://localhost:5000", MEDIUM_DEVICE, ); expect(metrics).hasItsFirstMeaningfulPaintUnder(500); });

it("should have its first meaningful paint in less than 1 second on poor device", async () => { const metrics = await getDetailedPagePerformanceMetrics( page, "http://localhost:5000", POOR_DEVICE, ); expect(metrics).hasItsFirstMeaningfulPaintUnder(500); });});
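Performance.getMetrics reports both timestamps (NavigationStart, DomContentLoaded, FirstMeaningfulPaint) and accumulated durations (ScriptDuration, LayoutDuration, and so on) in seconds, and only the timestamps make sense relative to NavigationStart. Here is a self-contained sketch of that conversion (`toMilliseconds` is a hypothetical name), run on made-up sample values shaped like the CDP response, so the arithmetic can be checked without a browser.

```javascript
// Hypothetical helper: turn a Performance.getMetrics-style response into milliseconds.
// Timestamps become relative to NavigationStart; *Duration metrics are only scaled.
const toMilliseconds = (response, names) => {
  const byName = new Map(response.metrics.map(({ name, value }) => [name, value]));
  const navigationStart = byName.get("NavigationStart");

  return names.reduce((acc, name) => {
    const seconds = byName.get(name);
    acc[name] = name.endsWith("Duration")
      ? Math.round(seconds * 1000)
      : Math.round((seconds - navigationStart) * 1000);
    return acc;
  }, {});
};

// Made-up sample values (in seconds), shaped like the CDP response
const sample = {
  metrics: [
    { name: "NavigationStart", value: 1000.25 },
    { name: "DomContentLoaded", value: 1000.5 },
    { name: "FirstMeaningfulPaint", value: 1000.75 },
    { name: "ScriptDuration", value: 0.125 },
  ],
};

const result = toMilliseconds(sample, ["DomContentLoaded", "FirstMeaningfulPaint", "ScriptDuration"]);
console.log(result);
// DomContentLoaded: 250, FirstMeaningfulPaint: 500, ScriptDuration: 125 (all in ms)
```

Subtracting NavigationStart from ScriptDuration would instead produce a large negative number, which would make a threshold assertion on it pass trivially.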

Configuring The Continuous Integration Server

The last thing we need to do is to configure our Continuous Integration server, Travis, to run these tests:

```yaml
# in .travis.yml
language: node_js
node_js:
  - "10"
# This addon ensures we run the latest stable Chrome, which supports headless mode
addons:
  chrome: stable
script:
  - make test
cache:
  directories:
    - node_modules
```

Conclusion

I hope this process will help you enforce accessibility in your apps. We found it very useful while adding features to our applications, as it warns us early when a change hurts the application’s performance or accessibility.

However, do not assume that your application is fully accessible just because your accessibility tests are all green. Axe-core is an amazing library, but it simply cannot identify some accessibility shortcomings. Make sure you use libraries that help make your application accessible, such as Reach Router, Downshift, and react-modal, among others.

You can find the example application in this repository: https://github.com/marmelab/puppeteer-accessibility

Authors

Full-stack web developer at marmelab, Gildas has a strong appetite for emerging technologies. If you want an informed opinion on a new library, ask him, he's probably used it on a real project already.

Full-stack web developer at marmelab. Alexis worked on so many websites that he's a great software architect. He also loves horse riding.