Load Testing a Node.js App with Flood.io

For one of our recent projects, we needed to make sure that the web application we built could handle heavy load ahead of an expected traffic peak.

Here is how we did it, and what we learned in the process.

Load testing vs. Stress testing

The ultimate goal of a stress test is to see how much traffic an application can handle. It finds the upper limit beyond which the application stops functioning properly. It is usually done with a pool of virtual users performing canned actions, and that pool grows until the product breaks.
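The ramp-up loop of a stress test can be sketched in a few lines of TypeScript. The `probe` callback is a hypothetical stand-in for "run this many virtual users and check that the app still behaves"; it is not part of any tool's API, and the start, step and max values are yours to choose.

```typescript
// Hypothetical probe: runs `users` virtual users against the app and reports
// whether it still behaved correctly (error rate, response times, ...).
type Probe = (users: number) => boolean;

// Grow the pool of virtual users step by step until the probe fails.
// Returns the largest user count that passed, or 0 if the first step failed.
function findBreakingPoint(probe: Probe, start: number, step: number, max: number): number {
  if (step <= 0) {
    throw new Error("step must be positive, otherwise the pool never grows");
  }
  let lastHealthy = 0;
  for (let users = start; users <= max; users += step) {
    if (!probe(users)) {
      return lastHealthy; // the app broke at `users`
    }
    lastHealthy = users;
  }
  return lastHealthy; // no failure within the tested range
}

// Example: a fake app that breaks beyond 750 concurrent users.
console.log(findBreakingPoint((users) => users < 750, 100, 100, 2000)); // 700
```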

A load test looks similar, but its objective is to prove that the product can sustain a given load for a given duration. It's best to run it against the whole application, so that every bottleneck comes to light.
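By contrast, a load test has a fixed target and a fixed duration, and simply has to hold. This sketch assumes per-minute samples with a request count and an error count (a hypothetical shape, not Flood.io's result format) and an error-rate threshold you would pick yourself.

```typescript
// Hypothetical per-minute measurement collected during the test.
interface Sample {
  minute: number;   // minutes elapsed since the test started
  requests: number; // requests served during that minute
  errors: number;   // failed requests during that minute
}

// A load test passes if, for every minute of the planned duration, the app
// served at least the target rate and stayed under the allowed error rate.
function loadTestPassed(
  samples: Sample[],
  targetPerMinute: number,
  durationMinutes: number,
  maxErrorRate: number,
): boolean {
  for (let minute = 0; minute < durationMinutes; minute++) {
    const sample = samples.find((s) => s.minute === minute);
    if (sample === undefined) return false; // missing data counts as a failure
    if (sample.requests < targetPerMinute) return false; // could not keep up
    if (sample.requests > 0 && sample.errors / sample.requests > maxErrorRate) return false;
  }
  return true;
}
```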

Load testing is a great way to gain insights into how your application behaves under heavy load and how all its services interact, and to plan production capacity accordingly.

Test Scenario and Setup

Before unleashing an army of gremlins on our shiny new app, let's take a step back.

First, we need to define objectives based on the real usage of the product. For an application already in production, the objective can be a requests per minute (rpm) rate slightly higher than the maximum ever seen in production. Or, if the Marketing team sends a large mailing, the objective can be calculated by multiplying the number of recipients by the expected opening rate.
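Both ways of setting an objective boil down to simple arithmetic. In this sketch, the 10% margin over the production peak and the window over which mailing recipients arrive are assumptions to replace with your own figures. Note that the mailing formula yields visitors per minute, and each visit usually triggers several requests.

```typescript
// Objective for an app already in production: a margin above the highest
// requests-per-minute rate ever observed (integer percent avoids float rounding).
function objectiveFromProduction(peakRpm: number, marginPercent = 10): number {
  return Math.ceil((peakRpm * (100 + marginPercent)) / 100);
}

// Objective for a large mailing: recipients x expected opening rate gives the
// expected visitors; spreading them over an assumed arrival window gives a
// per-minute rate of visitors (each visit will usually trigger several requests).
function visitorsPerMinuteFromMailing(
  recipients: number,
  openingRate: number, // e.g. 0.2 for a 20% opening rate
  windowMinutes: number,
): number {
  return Math.ceil((recipients * openingRate) / windowMinutes);
}

console.log(objectiveFromProduction(1200));                // 1320
console.log(visitorsPerMinuteFromMailing(50000, 0.2, 60)); // 167
```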

In our case, we built a product from scratch to replace an existing one. The logs of that legacy product were highly valuable, allowing us to set clear objectives.

Next, we had to set up a test environment that matches the production architecture. It's important to include related services if possible:

- Does your app run behind a proxy? Include it.

- Does it use a third-party service to send mail? Plug it in.

- What about that legacy protocol you bypass in development? Use it, too.

Any part of your product can break under these specific conditions, and you want to catch it if it does.

Also, write a scenario based on what users will actually do in your product, assuming the worst case.

Our product workflow was dead simple:

- Log into the app using a well-known SSO service

- Look for a particular HTML element on the front page

- Upload a file to a drop zone

- Leave the app

These simple steps would trigger a complex chain of events compiling information for power users, who have to manage thousands of users.

Hence, our test scenario included the worst case: each user belongs to as many groups as possible and uploads the largest file allowed (5MB).
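The scenario above, with its worst-case data, could be sketched in TypeScript as follows. The `ScenarioBrowser` interface, the CSS selectors and the user naming scheme are hypothetical placeholders, not the Flood Element API, and we assume "5MB" means 5 * 1024 * 1024 bytes.

```typescript
// Hypothetical, minimal browser interface for illustration only:
// this is NOT the Flood Element API.
interface ScenarioBrowser {
  login(user: string): Promise<void>;
  waitForElement(selector: string): Promise<void>;
  uploadFile(dropZoneSelector: string, file: Uint8Array): Promise<void>;
  leave(): Promise<void>;
}

// Worst case: the largest upload allowed, assuming "5MB" means 5 MiB.
const MAX_UPLOAD_BYTES = 5 * 1024 * 1024;

function worstCaseFile(): Uint8Array {
  // Dummy content: every byte set to the ASCII code of "a".
  return new Uint8Array(MAX_UPLOAD_BYTES).fill(0x61);
}

// Worst case: a test user registered to every available group.
function worstCaseUser(id: number, allGroups: string[]): { name: string; groups: string[] } {
  return { name: `load-test-user-${id}`, groups: allGroups.slice() };
}

// The four steps every virtual user performs.
async function runScenario(browser: ScenarioBrowser, user: string): Promise<void> {
  await browser.login(user); // 1. log in through the SSO service
  await browser.waitForElement("#front-page-marker"); // 2. hypothetical selector
  await browser.uploadFile("#drop-zone", worstCaseFile()); // 3. hypothetical selector
  await browser.leave(); // 4. leave the app
}
```

Injecting the browser keeps the steps independent of any particular tool, so they can be checked against a fake implementation before being wired to a real one.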

Flood.io

There are several load and stress testing tools out there. We started by looking for a SaaS product that would let us launch the test as quickly as possible.

After some research, Flood.io drew our attention because of its many advantages.

Its principle is simple: write a script with the tool you already use for your E2E tests (Selenium, Gatling), or with Flood's own scripting language, Element, which is based on TypeScript.

Then submit that script through a small form where you set the number of virtual users that will run it and distribute them among a set of servers, and watch the results.
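Flood.io handles the distribution itself. Purely as an illustration of what that form setting means, here is one simple way to spread virtual users evenly across load-generator servers; it is an assumption, not necessarily how Flood.io does it.

```typescript
// Spread `totalUsers` virtual users as evenly as possible over `servers`
// load-generator nodes; when the split is uneven, the first nodes get one extra user.
function distributeUsers(totalUsers: number, servers: number): number[] {
  if (!Number.isInteger(totalUsers) || totalUsers < 0) {
    throw new Error("totalUsers must be a non-negative integer");
  }
  if (!Number.isInteger(servers) || servers <= 0) {
    throw new Error("servers must be a positive integer");
  }
  const base = Math.floor(totalUsers / servers);
  const remainder = totalUsers % servers;
  const perServer: number[] = [];
  for (let i = 0; i < servers; i++) {
    perServer.push(base + (i < remainder ? 1 : 0));
  }
  return perServer;
}

console.log(distributeUsers(1000, 3)); // [ 334, 333, 333 ]
```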

Pricing is based on hourly server usage, and the servers run on Amazon Web Services (AWS), which lets you run them anywhere in the world.

It’s also possible to run Element on your own host by installing it locally:

```shell
npm install @flood/element-cli
./node_modules/.bin/element run scenario.ts
```

Expectations and Results

The architecture of the application we tested is pretty common and composed of multiple layers:

- Authentication proxy

- Single Page Application (SPA)

- Node.js web server, which serves the SPA and the API

- PostgreSQL database

- Redis + workers, which run costly processes asynchronously

- A few third-party services
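The web server / worker split works roughly like the sketch below. It uses an in-memory array as a stand-in for Redis, and the job shape and function names are hypothetical; a real setup would push jobs to Redis and run the workers in separate processes.

```typescript
// Hypothetical job produced by an upload.
interface Job {
  id: number;
  fileName: string;
}

// In-memory stand-in for the Redis-backed queue: only the control flow is shown.
class JobQueue {
  private jobs: Job[] = [];

  enqueue(job: Job): void {
    this.jobs.push(job);
  }

  dequeue(): Job | undefined {
    return this.jobs.shift();
  }

  size(): number {
    return this.jobs.length;
  }
}

// Web server side: accept the upload, enqueue the costly work, answer right away.
function handleUpload(queue: JobQueue, id: number, fileName: string): { status: number } {
  queue.enqueue({ id, fileName });
  return { status: 202 }; // 202 Accepted: the processing happens later
}

// Worker side: drain the queue, running the costly processing for each job.
function runWorker(queue: JobQueue, processJob: (job: Job) => void): number {
  let processed = 0;
  let job = queue.dequeue();
  while (job !== undefined) {
    processJob(job);
    processed++;
    job = queue.dequeue();
  }
  return processed;
}
```

Because the web server only enqueues, its response time stays short even when each job is expensive; the cost shows up in the workers instead.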

Our objective, based on our highest predictions, was for a thousand users to be able to upload ~2000 documents in 20 minutes. That's 100 uploads per minute at the highest traffic peak.
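The arithmetic behind that target, plus the upload bandwidth it implies if every file is the 5MB worst case from our scenario (assumed here to be 5 * 1024 * 1024 bytes), looks like this:

```typescript
// Rates implied by an upload target spread evenly over a time window.
function uploadTargets(uploads: number, windowMinutes: number, fileBytes: number) {
  const perMinute = uploads / windowMinutes;
  return {
    perMinute,
    perSecond: perMinute / 60,
    bytesPerSecond: (perMinute * fileBytes) / 60, // if every upload is worst-case size
  };
}

// ~2000 uploads in 20 minutes, 5MB files (assumed to be 5 * 1024 * 1024 bytes).
const target = uploadTargets(2000, 20, 5 * 1024 * 1024);
console.log(target.perMinute);                                   // 100
console.log(target.perSecond.toFixed(2));                        // 1.67
console.log((target.bytesPerSecond / (1024 * 1024)).toFixed(2)); // 8.33 (MiB/s)
```

This assumes the uploads are spread evenly over the 20 minutes; real traffic is burstier, so short spikes can exceed these averages.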

We were pretty confident that our small web server would take the load, given that the heavy processing would be handled by the workers. And we wanted to know whether the third-party services would hold up.

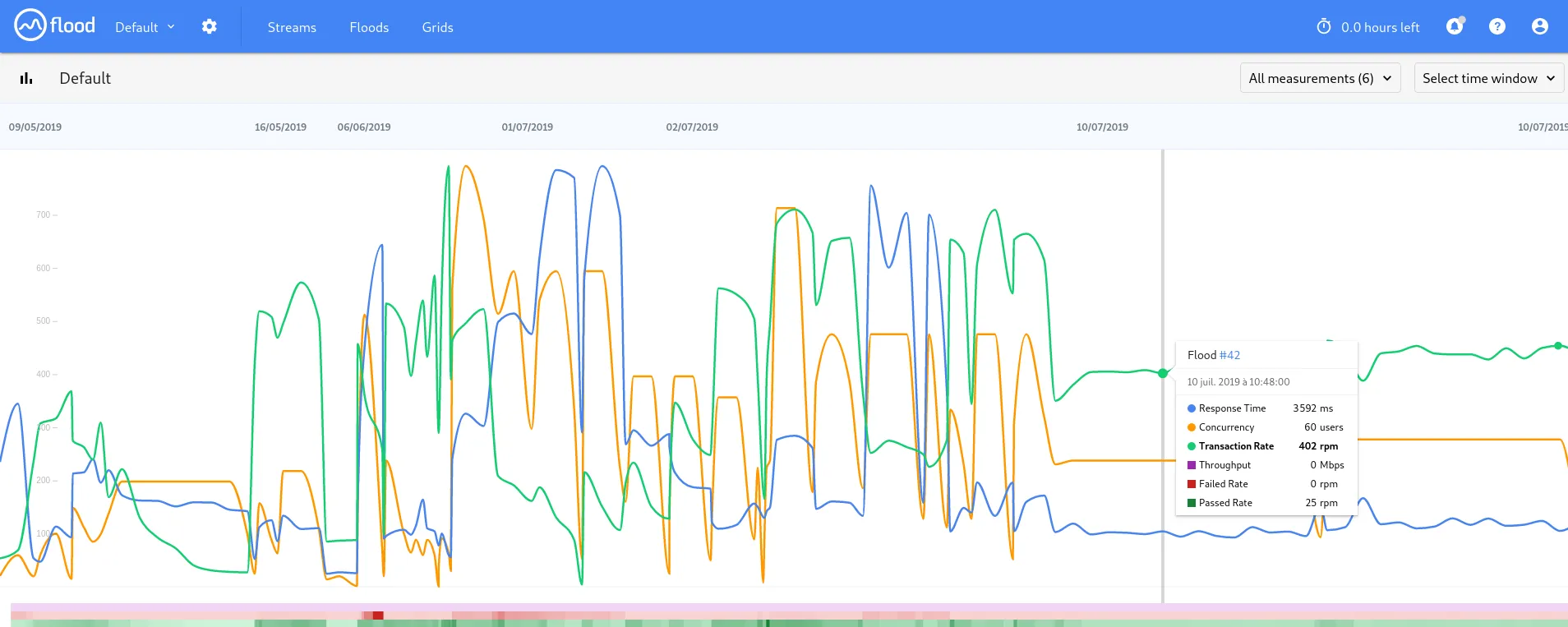

So, we first ran small tests with a few users to check our script, then gradually increased the load.

The results were astonishing. Contrary to our expectations, the third-party services didn't bat an eyelid. On the other hand, the memory used by the web server AND by our worker was never freed. So, after a few tests, the server crashed from memory exhaustion.
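A leak like this one shows up as a heap that keeps growing across the test instead of going up and down. One rough heuristic, sketched below, fits a straight line through heap samples taken at a regular interval (for instance values of `process.memoryUsage().heapUsed` collected every few seconds in Node.js) and flags a steady upward slope. The threshold is an assumption you would tune for your app.

```typescript
// Heuristic leak check on heap samples (bytes) taken at a regular interval.
// It fits a least-squares line through the samples and flags a leak when the
// heap grows by more than `maxGrowthPerSample` bytes per sample on average.
function looksLikeLeak(samples: number[], maxGrowthPerSample: number): boolean {
  const n = samples.length;
  if (n < 2) return false; // not enough data to see a trend
  const meanX = (n - 1) / 2; // sample indices are 0..n-1
  const meanY = samples.reduce((sum, y) => sum + y, 0) / n;
  let sumXY = 0;
  let sumXX = 0;
  samples.forEach((y, x) => {
    sumXY += (x - meanX) * (y - meanY);
    sumXX += (x - meanX) * (x - meanX);
  });
  const slope = sumXY / sumXX; // bytes gained per sample
  return slope > maxGrowthPerSample;
}

const MB = 1024 * 1024;
console.log(looksLikeLeak([100, 200, 300, 400, 500].map((m) => m * MB), MB)); // true
console.log(looksLikeLeak([100, 150, 100, 150, 100].map((m) => m * MB), MB)); // false
```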

After fixing the memory leak and a few small bugs we found, we ran the test again and everything was clear.

Finally, we confirmed that the product we built was able to handle the load. We were also able to plan the capacity of the production servers. And we helped the system administrators gain confidence in the tools we chose, and install a fully featured monitoring suite.
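Capacity planning from load test results can start from a back-of-the-envelope formula like the one below. The per-instance throughput would come from your own measurements, the headroom percentage is an assumption, and the example numbers are illustrative only.

```typescript
// Rough capacity estimate: number of web server instances needed to serve
// `targetPerMinute`, given what a single instance sustained during the load
// test, while keeping `headroomPercent` of each instance's capacity spare.
function instancesNeeded(
  targetPerMinute: number,
  perInstancePerMinute: number,
  headroomPercent: number,
): number {
  const usablePerInstance = (perInstancePerMinute * (100 - headroomPercent)) / 100;
  if (usablePerInstance <= 0) {
    throw new Error("each instance must have some usable capacity");
  }
  return Math.ceil(targetPerMinute / usablePerInstance);
}

// Illustrative numbers only: 100 uploads/min, 60/min per instance, 25% headroom.
console.log(instancesNeeded(100, 60, 25)); // 3
```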

Conclusion

Load testing is a powerful tool when delivering a new product: it makes every stakeholder more confident, and it definitely helps get an app ready for its production goals.

Authors

Full-stack web developer at marmelab, Kevin also shows impressive sysadmin skills and a passion for obscure cryptocurrencies. Oh, and don't challenge him at foosball unless you're a champion.