Using ESI elements with Next.js

Recently, we migrated a React site based on a homemade SSR system to Next.js. We don't regret this choice: the developer experience is excellent, we gained performance, and we no longer have to struggle with upgrading the old stack.

But we were still faced with a problem.

Our site depends on an API that returns all the content of a page in a single call. Next's SSR system is perfect for this single call, since a Varnish server caches each entire page. But we have a second piece of content to display, which requires another API call: the site footer. This footer can change, because it is editable through a private interface, but it changes much less often than the content of a page. Finally, this footer is not contextual: it is the same across the entire site, regardless of the page.

The question was: how could we avoid calling the footer API route every time a page is generated?

Caching The API Call

The first obvious solution is to cache this call to the footer API route on the Next server.
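For comparison, here is a minimal sketch of that first option, assuming a plain in-memory cache inside the Next.js server process. The cacheFor helper is a hypothetical name of ours, not a Next.js API, and the clock is injectable only to make the sketch testable.

```javascript
// Hypothetical helper (not part of Next.js): memoizes the result of an async
// loader for ttlMs milliseconds, so the footer API is called at most once per
// period instead of once per page render.
function cacheFor(ttlMs, loader, now = Date.now) {
  let value;
  let expiresAt = 0;
  let pending = null; // shared promise, so concurrent renders trigger a single call

  return async function cached() {
    if (now() < expiresAt) return value; // still fresh: serve from memory
    if (!pending) {
      pending = loader()
        .then((result) => {
          value = result;
          expiresAt = now() + ttlMs;
          return result;
        })
        .finally(() => {
          pending = null;
        });
    }
    return pending;
  };
}

// Usage sketch (FOOTER_API_URL is a placeholder, not from the original project):
// const getFooter = cacheFor(60_000, () => fetch(FOOTER_API_URL).then((r) => r.json()));
```

This cache lives in the memory of each Node.js process: every server instance keeps its own copy, and it is lost on restart.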

This is certainly a very good, well-tested, and proven solution. But we kept it as a second option because, during our discussions on this problem, we had formulated this wish:

It would have been great if Next could render a specific component with a different cache than the page that calls it. A kind of ESI of the SSR.

ESI, or Edge Side Includes, lets a CDN or a reverse proxy compose a page from several HTML fragments, each with its own cache duration.
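To make the idea concrete, here is a toy model of what an ESI-capable proxy does with a page: it replaces each esi:include tag with the matching fragment, and caches every fragment under its own URL with its own lifetime. It is only an illustration under simplifying assumptions (the hypothetical fetchFragment callback returns a TTL in seconds directly, and attributes are not HTML-decoded); Varnish's real ESI processing is far more complete.

```javascript
// Toy ESI proxy (illustration only, not how Varnish is implemented).
// Matches tags such as <esi:include src="/some/fragment" />
const ESI_INCLUDE = /<esi:include\s+src="([^"]*)"\s*\/>/g;

// fetchFragment(src) is a hypothetical callback that asks the origin server
// for a fragment and resolves to { html, ttlSeconds }.
function createEsiProxy(fetchFragment, now = Date.now) {
  const fragmentCache = new Map(); // src URL -> { html, expiresAt }

  async function getFragment(src) {
    const entry = fragmentCache.get(src);
    if (entry && now() < entry.expiresAt) return entry.html; // cache hit
    const { html, ttlSeconds } = await fetchFragment(src); // cache miss: ask the origin
    fragmentCache.set(src, { html, expiresAt: now() + ttlSeconds * 1000 });
    return html;
  }

  // Replaces every include tag in the page with its (possibly cached) fragment.
  return async function compose(pageHtml) {
    const sources = [...pageHtml.matchAll(ESI_INCLUDE)].map((match) => match[1]);
    const fragments = await Promise.all(sources.map(getFragment));
    let index = 0;
    return pageHtml.replace(ESI_INCLUDE, () => fragments[index++]);
  };
}
```

The page and each fragment can therefore expire independently: a page cached for a few seconds can reuse a footer fragment cached for minutes.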

And there is a library for using ESI in React: react-esi.

Setting Up react-esi

The project documentation is very clear, and setting up an ESI compatible footer is not a problem.

Let's assume that our site uses a layout that includes the footer on every page.

```javascript
// in src/components/Layout.js
import withESI from "react-esi";
import Footer from "./Footer";
import styles from "../styles/Home.module.css";

// The second parameter is a unique ID identifying this fragment.
const FooterESI = withESI(Footer, "Footer");

const Layout = ({ children }) => {
  return (
    <div className={styles.container}>
      {children}
      <FooterESI repo="react-esi" />
    </div>
  );
};

export default Layout;
```

```javascript
// in src/components/Footer.js
import React from "react";

class Footer extends React.Component {
  render() {
    return (
      <footer>
        The react-esi repository has{" "}
        <span>{this.props.data ? this.props.data.stargazers_count : "--"}</span>{" "}
        stars on Github.
      </footer>
    );
  }

  static async getInitialProps({ props, req, res }) {
    return fetch(`https://api.github.com/repos/dunglas/${props.repo}`)
      .then((fetchResponse) => fetchResponse.json())
      .then((json) => {
        // res is only available when the fragment is rendered in isolation by its endpoint
        if (res) {
          res.set("Cache-Control", "s-maxage=60, max-age=30");
        }
        return {
          ...props,
          data: json,
        };
      });
  }
}

export default Footer;
```

This is a good time for a first remark: react-esi does not use a hook, but a good old Higher-Order Component (HOC) and a React class. It's probably not trendy, but it's still a nice pattern. For those among us who are worried, let's remember what the React documentation says:

TLDR: There are no plans to remove classes from React.

The front-end part is complete. But as we'll see in the next part of this article, for a component to be cached through ESI, a dedicated endpoint must also be set up to render that component independently of the rest of the page.

This explains the if (res) in the Footer class. When the component is rendered in isolation by that endpoint, it is handled as a dedicated HTTP call, so getInitialProps receives the req request and res response objects. We can then declare the cache duration of that component with the standard Cache-Control HTTP header.
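The Cache-Control value used in the Footer sets two different lifetimes. As a reminder of the HTTP caching rules (RFC 9111), here is a simplified sketch of how a shared cache such as Varnish and a private cache read it: s-maxage only applies to shared caches and takes precedence over max-age there, while private caches ignore it. These helpers are hypothetical and deliberately ignore directives such as no-cache and must-revalidate, as well as the Expires header.

```javascript
// Parses a Cache-Control header into a map of directives, e.g.
// "s-maxage=60, max-age=30" -> { "s-maxage": "60", "max-age": "30" }.
function parseCacheControl(header) {
  const directives = {};
  for (const part of header.split(",")) {
    const [rawName, rawValue] = part.trim().split("=");
    if (!rawName) continue;
    directives[rawName.toLowerCase()] =
      rawValue === undefined ? true : rawValue.replace(/^"|"$/g, "");
  }
  return directives;
}

// Freshness lifetime (seconds) for a shared cache such as Varnish or a CDN.
function sharedCacheTtl(header) {
  const d = parseCacheControl(header);
  if (d["no-store"] || d.private) return 0; // shared caches must not store these
  if (d["s-maxage"] !== undefined) return Number(d["s-maxage"]); // wins over max-age
  if (d["max-age"] !== undefined) return Number(d["max-age"]);
  return 0; // simplification: no heuristic freshness
}

// Freshness lifetime (seconds) for a private cache: s-maxage is ignored.
function privateCacheTtl(header) {
  const d = parseCacheControl(header);
  if (d["no-store"]) return 0;
  return d["max-age"] !== undefined ? Number(d["max-age"]) : 0;
}
```

With s-maxage=60, max-age=30, a shared cache keeps the footer fragment fresh for 60 seconds, while a private cache considers the same response fresh for 30 seconds.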

To implement this new route, we need a custom Next.js server, defined in a server.js file:

```javascript
// in src/server.js
const express = require("express");
const next = require("next");
const { path, serveFragment } = require("react-esi/lib/server");

// Development mode is only enabled when NODE_ENV is explicitly "development".
const dev = process.env.NODE_ENV === "development";

const app = next({ dev });
const handle = app.getRequestHandler();
const port = 3000;

app.prepare().then(() => {
  const server = express();

  // Fragment endpoint (/_fragment by default): renders a single component by its ID.
  server.get(path, (req, res) =>
    serveFragment(
      req,
      res,
      (fragmentID) => require(`./components/${fragmentID}`).default,
    ),
  );

  // Every other request goes through the regular Next.js handler.
  server.all("*", (req, res) => {
    return handle(req, res);
  });

  server.listen(port, (err) => {
    if (err) throw err;
    console.log(`> Ready on http://localhost:${port}`);
  });
});
```

The serveFragment function from react-esi handles this new route. By default, this endpoint is exposed at /_fragment, but the path can be configured with the REACT_ESI_PATH environment variable.

Warning: for these changes to take effect, you must no longer start the development application with next dev, but with NODE_ENV=development node src/server.js!

There is one last little thing to do: add the Surrogate-Control HTTP header, which declares that our response contains ESI. We can set this header in the server.js file, or in the Next.js configuration file:

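```javascript
// in next.config.js
module.exports = {
  async headers() {
    return [
      {
        // "/:path*" matches every page, since the footer is included site-wide
        source: "/:path*",
        headers: [
          {
            key: "Surrogate-Control",
            value: 'content="ESI/1.0"',
          },
        ],
      },
    ];
  },
};
```

Going To Production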

The first step toward a production environment is to build our application. And this is where react-esi makes us leave idiomatic Next.js behind.

The Next.js build transpiles and bundles (via Webpack) the code of the client application. However, for the /_fragment endpoint to work, the server needs the code of the requested component to be transpiled and isolated (i.e. outside of the usual Next.js chunks)! We must therefore add a Babel transpilation step to our build task.

In package.json (npm runs the postbuild script automatically after build):

```json
{
  "name": "react-esi",
  "version": "0.1.0",
  "private": true,
  "scripts": {
    "dev": "NODE_ENV=development node src/server.js",
    "build": "next build",
    "postbuild": "babel src -d dist",
    "start": "node dist/server.js"
  }
}
```

**Second warning:** for these changes to take effect, you must no longer start the production application with next start, but with node dist/server.js!

There is one last point to deal with before testing the production version: setting up an HTTP cache server, for example Varnish, in front of the Next.js server. The easiest solution on a development workstation is to use Docker and Docker Compose. You will find an example configuration in the GitHub repository of this article.

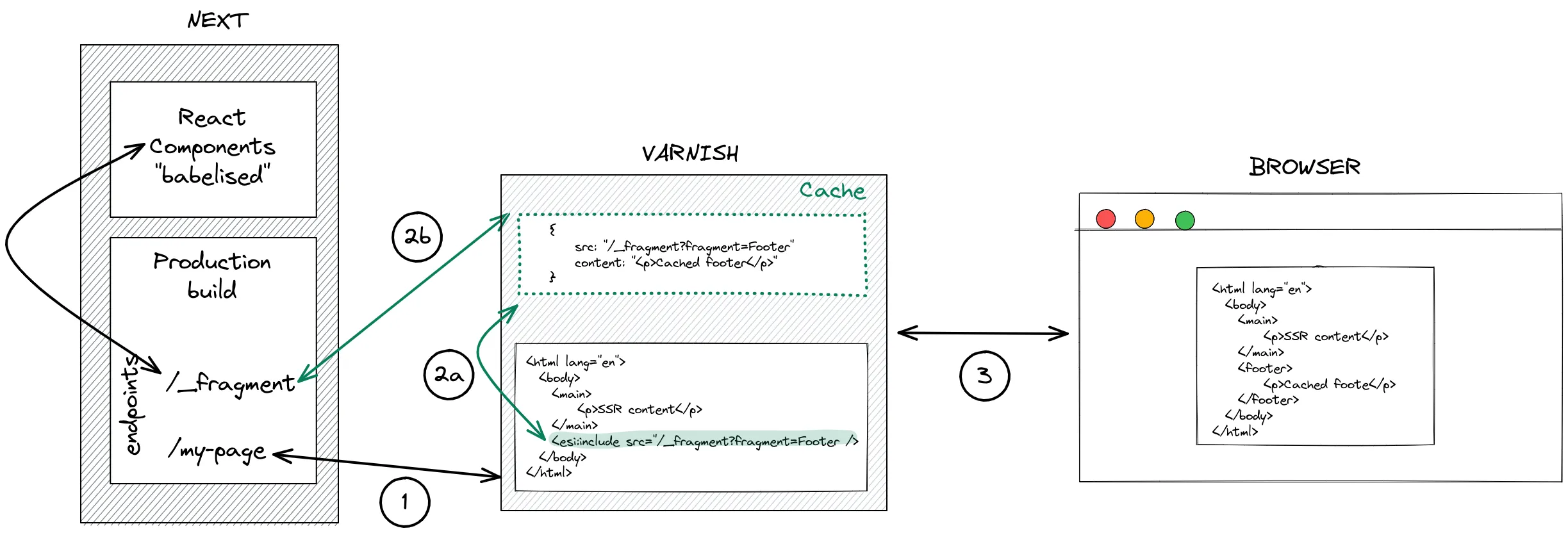

And now, here is a schematic of how our cached footer implementation works:

(1) The Next.js server does not return the rendered Footer component, but an ESI element:

```html
<div>
  <esi:include src="/_fragment?fragment=Footer&props={"repo":"react-esi"}&sign=9aa38503cb866bfb53f4c5ba1a7b136f19d25fde20d3d4ba13ebea1" />
</div>
```

The analysis of this ESI element calls for two comments.

Firstly, we can see that the URL names the component we want to render (/_fragment?fragment=Footer), but also passes the props of that component (&props={"repo":"react-esi"}). This is very useful! Since the props are part of the fragment URL, and the proxy caches each fragment by its URL, every combination of props gets its own cache entry. Suppose that our footer is internationalized: it would then receive a locale prop, and we would get a different cached footer per locale.
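Here is a hypothetical fragmentUrl helper that builds URLs shaped like the one above; react-esi's actual code may differ, and it also appends a signature. It shows why each set of props gets its own cache entry: the props are serialized into the URL, and the URL is the cache key. URLSearchParams percent-encodes the JSON, which keeps its quotes from breaking the src attribute.

```javascript
// Hypothetical helper: /_fragment?fragment=<ID>&props=<JSON, percent-encoded>
function fragmentUrl(fragmentID, props, path = "/_fragment") {
  const params = new URLSearchParams({
    fragment: fragmentID,
    props: JSON.stringify(props), // props become part of the cache key
  });
  return `${path}?${params}`;
}

// One URL, hence one cache entry, per locale:
// fragmentUrl("Footer", { repo: "react-esi", locale: "en" })
// fragmentUrl("Footer", { repo: "react-esi", locale: "fr" })
```

One side effect of this design: { repo, locale } and { locale, repo } serialize differently, so the same props passed in a different key order would create a second cache entry.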

Secondly, the URL includes a signature (&sign=9aa38503cb866bfb53f4c5ba1a7b136f19d25fde20d3d4ba13ebea1) to secure this endpoint: the server can check that the fragment ID and props were generated by itself, and not forged by a client.
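react-esi signs its fragment URLs, but we don't reproduce its exact algorithm here. The following is an independent sketch of the general technique, an HMAC-SHA256 over the query string using Node's built-in crypto module; SECRET and all function names are hypothetical placeholders.

```javascript
const crypto = require("node:crypto");

// Hypothetical placeholder: a real secret must come from configuration.
const SECRET = "change-me";

// HMAC-SHA256 of the query string (without the sign parameter), hex-encoded.
function sign(query, secret = SECRET) {
  return crypto.createHmac("sha256", secret).update(query).digest("hex");
}

// Builds a fragment URL and appends its signature.
function signedFragmentUrl(fragmentID, props, path = "/_fragment") {
  const params = new URLSearchParams({ fragment: fragmentID, props: JSON.stringify(props) });
  params.append("sign", sign(params.toString()));
  return `${path}?${params}`;
}

// Server side: recompute the signature and compare it in constant time.
function verifyFragmentUrl(url, secret = SECRET) {
  const params = new URL(url, "http://localhost").searchParams;
  const received = Buffer.from(params.get("sign") || "", "hex");
  params.delete("sign");
  const expected = Buffer.from(sign(params.toString(), secret), "hex");
  return received.length === expected.length && crypto.timingSafeEqual(received, expected);
}
```

With this kind of scheme, the secret must be identical on every server instance and stable across restarts; otherwise URLs generated by one process would be rejected by another.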

(2a) Varnish checks whether it has the fragment in its cache. If so, it uses the cached HTML to replace the ESI tag.

(2b) If Varnish does not have the fragment cached, it calls the Next.js server on the endpoint set in the src attribute of the element. This endpoint returns the HTML rendering of the component identified by the fragment request parameter. Varnish caches this portion of HTML and uses it to replace the ESI tag.

(3) Varnish returns the final HTML page to the client:

```html
<div>
  <script>
    window.__REACT_ESI__ = window.__REACT_ESI__ || {};
    window.__REACT_ESI__["Footer"] = {
      repo: "react-esi",
      data: { stargazers_count: 572 },
    };
    document.currentScript.remove();
  </script>
  <footer
    style="width:100%;height:100px;border-top:1px solid #eaeaea;text-align:center;padding-top:2rem"
  >
    The react-esi repository has
    <span style="font-weight:bold;margin:0 5px">572</span> stars on Github.
  </footer>
</div>
```

Is Varnish Becoming Mandatory In Development?

If Varnish is not present to render the ESI element, are we doomed to develop the ESI element content blindly?

Of course not. If the ESI tag reaches the browser, the component is rendered client-side by JavaScript. In our example, the call to the GitHub API is then made from the browser.

But it is quite simple to set up a Varnish server in a development environment with Docker. And even if Varnish is not used during development, it's still very practical and reassuring to have an environment that lets you test the production build before sending it … to production.

You can find an example Docker Compose configuration in the react-esi-demo repository. By the way, feel free to use this repository to try out ESI fragments yourself!

Conclusion

Using ESI elements clearly solves our initial problem.

Caching the API call on the server side would probably also have met the need. And perhaps, once React Server Components leave their experimental phase, Next.js will support them natively and offer an idiomatic answer for this kind of feature.

But until then, the use of ESI is bloody efficient. I really like the fact that it’s not based on some new JavaScript framework but on good old HTTP (the ESI 1.0 specification dates from 2001, Varnish from 2006). It almost feels like a low web :)

Joking aside, I sometimes feel that some new web technologies are trying to reinvent the wheel rather than making the best of what already exists. But Kevin Dunglas, the author of react-esi, is one of the people I know who does the best job of using everything that exists while continuing to innovate. I suggest you take a look at his presentation of Vulcain for another example. Special thanks to him for that.

Authors

Full-stack web developer at marmelab. Alexis worked on so many websites that he's a great software architect. He also loves horse riding.