Can You Fall In Love With An AI?

Have you ever seen the movie “Her”? It’s a 2013 movie by Spike Jonze, where the hero installs an artificial intelligence on his computer and falls in love with it. With recent advancements in artificial intelligence, this 2010s sci-fi scenario now seems entirely possible. So, I have tried to recreate the artificial intelligence from this movie.

Record Voice From User

The first step to obtaining an artificial intelligence you can talk to is to record the user’s voice. To do that, I use native browser APIs: MediaDevices and MediaRecorder. The first one allows to access the user’s microphone, and the second one allows to record audio from it.

To obtain permission to access the microphone, I use the getUserMedia method. It returns a MediaStream object, which contains the audio stream from the microphone. I store this stream in the component’s state.

const [permission, setPermission] = React.useState(false);const [stream, setStream] = React.useState<MediaStream | null>(null);

const getMicrophonePermission = async () => { if ("MediaRecorder" in window) { try { const streamData = await navigator.mediaDevices.getUserMedia({ audio: true, video: false, }); setPermission(true); setStream(streamData); } catch (err: any) { alert(err.message); } } else { alert("The MediaRecorder API is not supported in your browser."); }};Once I have permission to access the microphone, I can start recording by creating a MediaRecorder instance and calling its start method. I store the audio chunks in the component’s state to create a file from them at the end of the recording.

const mediaRecorder = React.useRef<any>(null);const [recordingStatus, setRecordingStatus] = React.useState< "recording" | "inactive">("inactive");const [audioChunks, setAudioChunks] = React.useState<any[]>([]);

const startRecording = async () => { setRecordingStatus("recording"); //create new Media recorder instance using the stream if (!stream) return; const media = new MediaRecorder(stream, { mimeType: mimeType }); //set the MediaRecorder instance to the mediaRecorder ref mediaRecorder.current = media; //invokes the start method to start the recording process mediaRecorder.current.start(); let localAudioChunks: any[] = []; mediaRecorder.current.ondataavailable = (event: any) => { if (typeof event.data === "undefined") return; if (event.data.size === 0) return; localAudioChunks.push(event.data); }; setAudioChunks(localAudioChunks);};const stopRecording = () => { setRecordingStatus("inactive"); //stops the recording instance mediaRecorder.current.stop(); mediaRecorder.current.onstop = async () => { //creates a blob file from the audiochunks data const audioBlob = new Blob(audioChunks, { type: mimeType }); setAudioChunks([]); const file = new File([audioBlob], "audio.webm", { type: "audio/webm" }); };};Transcript Audio To Text

Thanks to OpenAI API and its Whisper model, I obtain the transcript of the user’s voice with an API call to the transcription endpoint with the previously created audio file.

export function openAITranscription({ openAIKey }) { return async function query(audio) { const formData = new FormData(); formData.append("file", audio); formData.append("model", "whisper-1");

const requestOptions = { method: "POST", headers: { Authorization: "Bearer " + String(openAIKey), }, body: formData, }; const response = await fetch( "https://api.openai.com/v1/audio/transcriptions", requestOptions, ); const { text } = await response.json(); return text; };}Get Response From AI

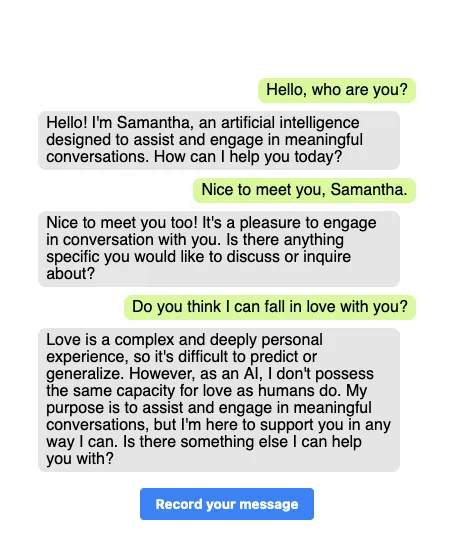

Now that I have the transcript of the user’s voice, comes the fun part: getting a response from an artificial intelligence. To do that, I use OpenAI’s Chat Completion API with GPT-3.5 model and a messages array.

const DEFAULT_PARAMS = { model: "gpt-3.5-turbo", temperature: 0.6, max_tokens: 256,};

export function openAIChat({ openAIKey }) { return async function query(messages) { const params = { ...DEFAULT_PARAMS, messages };

const requestOptions = { method: "POST", headers: { "Content-Type": "application/json", Authorization: "Bearer " + String(openAIKey), }, body: JSON.stringify(params), }; const response = await fetch( "https://api.openai.com/v1/chat/completions", requestOptions, ); const data = await response.json(); const completion = data.choices[0].message; return completion; };}To have the GPT model keep the context of the conversation, the messages array must contain the user’s message and the AI’s response from the beginning of the conversation. To do that, I store the messages in the component’s state and update them with the user’s message and the AI’s response. I initialize the messages array with a simple message from the system role to introduce the AI.

const [messages, setMessages] = React.useState< { role: string; content: string; }[]>([ { role: "system", content: "I want you to act like Samantha from the movie Her. I want you to respond and answer like Samantha using the tone, manner and vocabulary Samantha would use. Do not write any explanations. Only answer like Samantha. You must know all of the knowledge of Samantha.", },]);In the user interface, I display the messages like a chat application:

Play Response To User

Now, to improve the user experience and give the impression that the user talk with the AI, I play the AI’s response to the user. To do that, I use the SpeechSynthesis browser API. I found that one of the voices is called Samantha, which is the name of the AI in the movie Her, so I use it to play the AI’s response.

// set up browser TTSconst synthesis = window.speechSynthesis;let voice = synthesis.getVoices().find(function (voice) { return voice.lang === "en-US" && voice.name === "Samantha";});

React.useEffect(() => { const lastMessage = messages[messages.length - 1]; if (lastMessage.role === "assistant") { // Create an utterance object const utterance = new SpeechSynthesisUtterance(lastMessage.content); utterance.voice = voice; utterance.rate = 0.9; utterance.lang = "en-US"; // Speak the utterance synthesis.speak(utterance); }}, [messages]);Try It Yourself

The source code is open-source, available on Github at marmelab/IA-her. You can try it yourself with the following StackBlitz. You just need an OpenAI API key. If you are afraid to leak your key, you can check the code: the key is not stored and is only sent from your browser to the OpenAI API.

So, after testing it, can you fall in love with this AI? I don’t know, but I’m pretty sure that you can have a conversation with it.

Conclusion

That was a fun experiment, and a demonstration of the power of modern browser APIs. It also shows that recent AI breakthroughs are already available for web developmers, and can be simply integrated via API calls.

What impressed me the most is how easy it is to build this kind of application by combining different tools, like Whisper for the speech-to-text, GPT-3.5 for the AI, and SpeechSynthesis for the text-to-speech.

Marmelab is already integrating various AI capabilities in its applications. Check Molly and PredictiveTextInput for working examples.

Update: Since I worked on this experiment, OpenAI has integrated voice chat it its ChatGPT mobile application. But my Samantha is open-source!

Authors

Full-stack web developer at marmelab, Thibault also manages a local currency called "Le Florain", used by dozens of French shops around Nancy to encourage local exchanges.