Adding Voice Recognition To A Web App

How do you build an AI assistant that supports voice recognition? In this article, we explain how we added speech-to-text support to an existing app.

We performed audio speech recognition at the edge using the Whisper model from OpenAI. This article will also explain how we recorded the user’s microphone using the MediaStream Recording API provided by all major browsers.

This is the second part of a series about using modern tooling to build an AI assistant. The first article focuses on Text-to-Text generation: Building An AI Assistant at the Edge.

As a reminder, the objective of this series is to build a simple Aqua clone using Cloudflare Workers AI and Vue 3. The source code of the project is available on our GitHub at marmelab/cloudflare-ai-assistant.

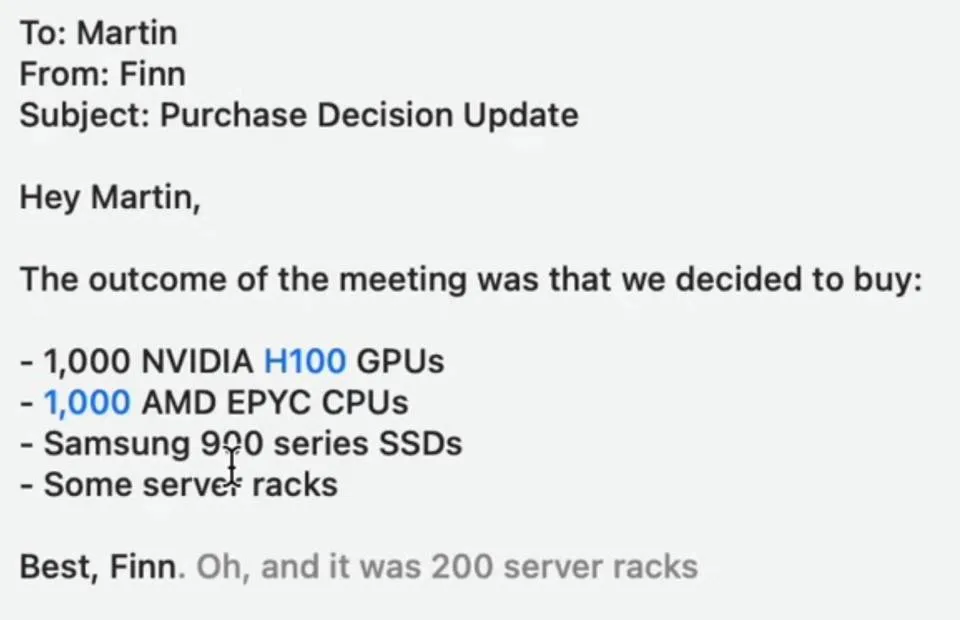

The result of our work is a simple AI assistant that can listen to the user’s voice and transcribe it into a prompt for a text editor.

Speech-to-Text using Cloudflare AI SDK

The Cloudflare Workers AI toolkit provides access to the Whisper voice recognition model from OpenAI. The model takes an audio file as input and returns the recognized voice as a text string. The model supports multiple audio formats, such as MP3, MP4, WAV or WebM.

An example of an API endpoint for the Nuxt framework that does speech-to-text inference is available below.

```ts
import { Ai } from "@cloudflare/ai";

// defineEventHandler, setResponseHeaders, setResponseStatus and readRawBody
// are auto-imported by Nuxt on the server side.
export default defineEventHandler(async (event) => {
  // Cloudflare bindings are exposed on the event context
  const cloudflareBindings = event.context?.cloudflare?.env;
  if (!cloudflareBindings) {
    throw new Error("No Cloudflare bindings found.");
  }

  setResponseHeaders(event, {
    "content-type": "application/json",
    "cache-control": "no-cache",
  });

  // Read the request body as raw bytes, without decoding it to a string
  const body = await readRawBody(event, false);

  if (!body) {
    setResponseStatus(event, 400);
    return JSON.stringify({
      status: 400,
      errors: [{ type: "required" }],
    });
  }

  const ai = new Ai(cloudflareBindings.AI);

  // Whisper expects the audio as an array of bytes
  const response = await ai.run("@cf/openai/whisper", {
    audio: [...body],
  });

  return {
    text: response.text,
  };
});
```

The Cloudflare AI API takes the raw audio byte array as input and returns the inferred words along with the word count. The Cloudflare endpoint does not support bi-directional streaming. That feature would have been a must-have for real-time audio recognition, and it would have made our task easier.
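To make the response shape more concrete, here is a minimal sketch of how the transcription result could be typed and summarized. The field names (text, word_count, and words with word, start and end) reflect our reading of the Cloudflare documentation for this model and should be treated as assumptions. WhisperResult and summarizeTranscription are hypothetical names that are not part of the project.

```typescript
// Assumed shape of the Whisper response (not verified against every model version).
interface WhisperWord {
  word: string;
  start: number; // seconds from the beginning of the audio
  end: number;
}

interface WhisperResult {
  text: string;
  word_count?: number;
  words?: WhisperWord[];
}

// Hypothetical helper: builds a compact payload for the front-end.
function summarizeTranscription(result: WhisperResult) {
  const words = result.words ?? [];
  const last = words[words.length - 1];
  return {
    text: result.text.trim(),
    // Fall back to counting the words when word_count is missing
    wordCount: result.word_count ?? words.length,
    // End timestamp of the last recognized word, or 0 when there is none
    lastWordEndSeconds: last ? last.end : 0,
  };
}
```

Returning such a summary instead of only the text would let the front-end ignore empty transcriptions without inspecting the string itself.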

Speech-to-Text In The Browser

Another option we considered for speech recognition was the Web Speech API, built into modern browsers. This experimental feature provides a high-level framework for speech-to-text. The SpeechRecognition interface is especially interesting for us as it can stream recognized text on the fly.

However, this API is only available behind the webkit vendor prefix on Chrome and has not been implemented yet by Firefox. Furthermore, the model depends on the browser vendor and the quality of the results varies greatly. Finally, we wanted to test the Whisper model provided with Cloudflare Workers AI.
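For readers who want to experiment with this option anyway, the sketch below shows one way to detect the prefixed and unprefixed constructors. This is not code from our project: createRecognizer, RecognitionLike and SpeechWindow are hypothetical names, and the minimal types are declared by hand because the Web Speech API typings are not available in every TypeScript setup.

```typescript
// Only the members used by this sketch are declared.
interface RecognitionLike {
  continuous: boolean;
  interimResults: boolean;
  lang: string;
  start(): void;
}

type RecognitionConstructor = new () => RecognitionLike;

interface SpeechWindow {
  SpeechRecognition?: RecognitionConstructor;
  webkitSpeechRecognition?: RecognitionConstructor;
}

// Returns a configured recognizer, or null when the browser has no support.
function createRecognizer(
  win: SpeechWindow,
  lang = "en-US",
): RecognitionLike | null {
  const Ctor = win.SpeechRecognition ?? win.webkitSpeechRecognition;
  if (!Ctor) {
    return null;
  }
  const recognition = new Ctor();
  recognition.continuous = true; // keep listening across pauses
  recognition.interimResults = true; // emit partial results on the fly
  recognition.lang = lang;
  return recognition;
}
```

In a browser, the function would be called with the global window object cast to SpeechWindow. A null result is the signal to fall back to a server-side model such as Whisper.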

Calling the API Endpoint from the Front-end

Nowadays, all major browsers support the MediaStream Recording API. This low-level API captures video and/or audio from the user's device directly in the browser. This is exactly what we need, as we want to record the user's prompts from their microphone.

Here is an example of how the user microphone can be recorded using JavaScript / TypeScript:

```ts
// We first get the audio stream. If the user has never visited the app before,
// the browser will ask for their permission.
const stream = await navigator.mediaDevices.getUserMedia({
  audio: true,
});

// We then create a MediaRecorder for an audio-only recording
const mediaRecorder = new MediaRecorder(stream, {
  mimeType: "audio/webm",
});

// We receive audio chunks
const recordedChunks: Blob[] = [];
mediaRecorder.addEventListener("dataavailable", (event) => {
  recordedChunks.push(event.data);
});

mediaRecorder.addEventListener("stop", () => {
  // We merge all audio chunks into a single audio file,
  // reusing the mime type of the recorder
  const audio = new Blob(recordedChunks, { type: mediaRecorder.mimeType });
  // Do something with audio
});

mediaRecorder.start();

// We stop recording after 10 seconds, for example
setTimeout(() => {
  mediaRecorder.stop();
}, 10000);
```

While this feature is great, we found that every browser has a different set of supported audio mime types. As there is no standard regarding the audio format, these sets do not necessarily intersect across browsers: for recording, Chrome supports WebM while Safari supports MP4. As we are building a Proof of Concept, we only used the WebM format with Chrome in the rest of this article to simplify the code.

During development, we also learned the hard way that the Blob must have the same mime type as the MediaRecorder. If they differ, Whisper cannot transcribe the audio.
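Beyond a Proof of Concept, one way to deal with these differences is to probe the browser before creating the recorder. The sketch below is an illustration and not the code we shipped: pickMimeType and the candidate list are hypothetical, and the support check is injected so that the function can also run outside a browser.

```typescript
// Candidate formats in order of preference (an assumption for this sketch).
const CANDIDATE_MIME_TYPES = ["audio/webm", "audio/mp4"];

// Returns the first supported mime type, or undefined if none is supported.
function pickMimeType(
  isSupported: (mimeType: string) => boolean,
  candidates: string[] = CANDIDATE_MIME_TYPES,
): string | undefined {
  return candidates.find((mimeType) => isSupported(mimeType));
}

// In a browser, the predicate would typically wrap the static method
// MediaRecorder.isTypeSupported:
//
//   const mimeType = pickMimeType((type) => MediaRecorder.isTypeSupported(type));
```

Passing the selected value to the MediaRecorder, then building the Blob from the recorder's own mimeType property, keeps both sides in sync.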

A Vue Composable for Voice Input

Because the MediaStream Recording API is low-level, it is framework-agnostic and can easily be wrapped in a Vue composable. This composable is responsible for abstracting away the difficulties of the API.

The composable provides access to the microphone state:

- microphoneDisabled is true if the user has not granted access to their microphone;
- recording is true when the microphone is on and recording the user’s prompt;
- startRecording initializes the media recorder and its event listeners;
- stopRecording stops the media recorder and cleans up the internal state.

Furthermore, the composable is also in charge of transcribing the audio once it is recorded. It calls the API endpoint we implemented in the first section, and exposes the following state:

- loading is true while the audio is being transcribed;
- recordedText holds the last transcribed text.

The complete useRecorder() composable is available below:

```ts
import { onBeforeUnmount, ref } from "vue";

export default function useRecorder() {
  // Private API
  const stream = ref<MediaStream | null>(null);
  const recorder = ref<MediaRecorder | null>(null);

  // Public API
  const microphoneDisabled = ref<boolean>(false);
  const recording = ref<boolean>(false);
  const loading = ref<boolean>(false);
  const recordedText = ref<string>("");

  const stopRecording = async () => {
    recording.value = false;
    recorder.value?.stop?.();

    // Release the microphone
    const tracks = stream.value?.getTracks() ?? [];
    for (const track of tracks) {
      track.stop();
    }

    recorder.value = null;
    stream.value = null;
  };

  const startRecording = async () => {
    microphoneDisabled.value = false;

    try {
      stream.value = await navigator.mediaDevices.getUserMedia({
        audio: true,
      });

      recording.value = true;

      const chunks: BlobPart[] = [];
      recorder.value = new MediaRecorder(stream.value, {
        mimeType: "audio/webm",
      });

      recorder.value.addEventListener("dataavailable", function (e) {
        chunks.push(e.data);
      });

      recorder.value.addEventListener("stop", function () {
        // The Blob must have the same mime type as the recorder
        const blob = new Blob(chunks, { type: "audio/webm" });

        loading.value = true;
        fetch("/api/voice", {
          method: "POST",
          body: blob,
        })
          .then(async (response) => {
            if (response.status !== 200 || !response.body) {
              return;
            }

            const { text } = (await response.json()) as {
              text: string;
            };
            if (!text) {
              return;
            }

            recordedText.value = text;
          })
          .finally(function () {
            loading.value = false;
          });
      });

      recorder.value.start();
    } catch (e) {
      console.error("Encountered error while requesting audio", e);
      microphoneDisabled.value = true;
      stopRecording();
    }
  };

  onBeforeUnmount(stopRecording);

  return {
    microphoneDisabled,
    recording,
    loading,
    recordedText,
    startRecording,
    stopRecording,
  };
}
```

Putting it All Together

Now that our useRecorder composable has been set up, we can use it from a Vue component. To trigger startRecording and stopRecording, we relied on the pointerdown and pointerup events of a microphone button, respectively. An example of how to use the composable is available below.

```vue
<script setup lang="ts">
import { watch } from "vue";
import useRecorder from "~/composables/useRecorder.js";

// MicrophoneIcon is assumed to be auto-imported from the components directory

const props = defineProps<{
  onRecordedText: (text: string) => void;
}>();

const {
  microphoneDisabled,
  loading,
  recording,
  recordedText,
  startRecording,
  stopRecording,
} = useRecorder();

// Forward each new transcription to the parent component
watch(recordedText, (newRecordedText) => {
  if (!newRecordedText) {
    return;
  }

  props.onRecordedText(newRecordedText);
});

// Recording starts when the button is pressed...
const handlePointerDown = (e: PointerEvent) => {
  e.preventDefault();
  startRecording();
};

// ...and stops when it is released
const handlePointerUp = (e: PointerEvent) => {
  e.preventDefault();
  stopRecording();
};

// Prevent the context menu from opening on long press
const handleContextMenu = (e: MouseEvent) => {
  e.preventDefault();
};
</script>

<template>
  <button
    @contextmenu="handleContextMenu"
    @pointerdown="handlePointerDown"
    @pointerup="handlePointerUp"
    :disabled="microphoneDisabled"
    type="button"
    :class="`btn btn-circle ${recording ? 'btn-success' : 'btn-primary'}`"
  >
    <span class="loading loading-spinner" v-if="loading" />
    <MicrophoneIcon v-else />
  </button>
</template>
```

To avoid loss of information, we chose to display the microphone button only if the user has not typed any prompt in the text field. Otherwise, the recorded text would overwrite the user's prompt.

Results, Limitations, and Future Directions

We tested the speech-to-text API with various prompts and found that the Whisper model performs well even though we are not native English speakers. A more detailed evaluation of the model, with various prompts in different languages, is still needed.

Regarding latency, we noticed that running speech-to-text at the edge does not provide a significant advantage here. The transcription itself is still the bottleneck and can take up to a few seconds.

Our application still needs some improvements to become a better AI assistant. Firstly, on-the-fly recognition while the microphone button is pressed has not been developed yet. To achieve this, we could have used either the SpeechRecognition API or a voice activity detection (VAD) library such as the ones provided by ricky0123. An example of a successful implementation of the latter is the Swift AI Assistant.
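To give an idea of what voice activity detection does, here is a deliberately naive sketch based on signal energy. It is not how the libraries mentioned above work (they usually rely on trained models), and the default threshold is an arbitrary assumption that would need tuning. rms and isSpeech are hypothetical names.

```typescript
// Root-mean-square energy of one frame of PCM samples in the range [-1, 1].
function rms(frame: Float32Array): number {
  if (frame.length === 0) {
    return 0;
  }
  let sum = 0;
  for (let i = 0; i < frame.length; i++) {
    sum += frame[i] * frame[i];
  }
  return Math.sqrt(sum / frame.length);
}

// Naive detector: a frame counts as speech when its energy exceeds a threshold.
function isSpeech(frame: Float32Array, threshold = 0.02): boolean {
  return rms(frame) > threshold;
}
```

With such a detector, the client could cut the recording at each pause and send the resulting chunks to the transcription endpoint one by one, which approximates on-the-fly recognition without a streaming API.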

Secondly, we replace the previous text with the new one after each LLM call. Users have to spot the differences by themselves and read the whole text to check the changes. A solution to this problem could be to implement a diffing algorithm that highlights the changes in the generated text.
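As an illustration of that idea, the sketch below computes a word-level diff using a longest common subsequence table. We have not implemented this in the project; diffWords and DiffOp are hypothetical names, and a production version would probably rely on an existing diffing library instead.

```typescript
type DiffOp = { type: "same" | "added" | "removed"; word: string };

function diffWords(before: string, after: string): DiffOp[] {
  const a = before.split(/\s+/).filter(Boolean);
  const b = after.split(/\s+/).filter(Boolean);

  // lcs[i][j] = length of the longest common subsequence of a[i..] and b[j..]
  const lcs: number[][] = Array.from({ length: a.length + 1 }, () =>
    new Array<number>(b.length + 1).fill(0),
  );
  for (let i = a.length - 1; i >= 0; i--) {
    for (let j = b.length - 1; j >= 0; j--) {
      lcs[i][j] =
        a[i] === b[j]
          ? lcs[i + 1][j + 1] + 1
          : Math.max(lcs[i + 1][j], lcs[i][j + 1]);
    }
  }

  // Walk both word lists, following the table to emit the operations
  const ops: DiffOp[] = [];
  let i = 0;
  let j = 0;
  while (i < a.length && j < b.length) {
    if (a[i] === b[j]) {
      ops.push({ type: "same", word: a[i] });
      i++;
      j++;
    } else if (lcs[i + 1][j] >= lcs[i][j + 1]) {
      ops.push({ type: "removed", word: a[i] });
      i++;
    } else {
      ops.push({ type: "added", word: b[j] });
      j++;
    }
  }
  while (i < a.length) ops.push({ type: "removed", word: a[i++] });
  while (j < b.length) ops.push({ type: "added", word: b[j++] });
  return ops;
}
```

The resulting operations could then be rendered with different styles in the editor, for instance by striking through the removed words and highlighting the added ones.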

Conclusion

Relying on Cloudflare Workers AI, we built a simple Aqua clone in three developer-days. Its API abstracts away most of the difficulties of both text-to-text and speech-to-text tasks. Even though we faced some challenges, such as timeouts for text generation or invalid mime types for audio transcription, the overall developer experience was great.

Regarding the performance of the provided models, we are impressed with how well the Whisper and Llama 3 models perform overall. Although we encountered some missed detections, especially on speech-to-text tasks as we are not native English speakers, we would definitely use them again in future projects.

Authors

Full-stack web developer at marmelab, Jonathan likes to cook and do photography in his spare time.

Full-stack web developer at marmelab, Anthony seeks to improve and learn every day. He likes basketball, motorsports and is a big Harry Potter fan.