DotAI 2018: Machine Learning for Humans

A few weeks ago, I attended DotAI 2018 in Paris. It was not the first time I attended a Dot conference. Yet, it was my first time at a machine learning event. I was afraid of the level of the conference. Stumbling upon blog posts about this topic often leads to headaches due to advanced maths everywhere.

Fortunately, almost all talks avoided rushing into pure technique. And today, I am more motivated than ever to bootstrap my machine learning skills. And with the help of other attendees, I now have some clues on how to start this long (but apparently not so hard) journey!

I’ve already attended several Dot conferences in the past, and each time I was disappointed, especially by the JS ones. Either I didn’t learn a lot, or I completely drowned in over-technical talks. But this time, it was an awesome afternoon, full of inspiring talks. Here is my feedback.

Machine Learning (ML) on Code


Let’s say I write a get function in my favorite IDE. If I start naming another function with an s, what would its name probably be? If you said set, either you are a human developer or a machine trained by Vadim Markovtsev.

Vadim explained how to anticipate the next inputs of a developer using machine learning. Taking all open-source GitHub repositories as a reference (big data, you said?), he ran a vector analysis of their code.

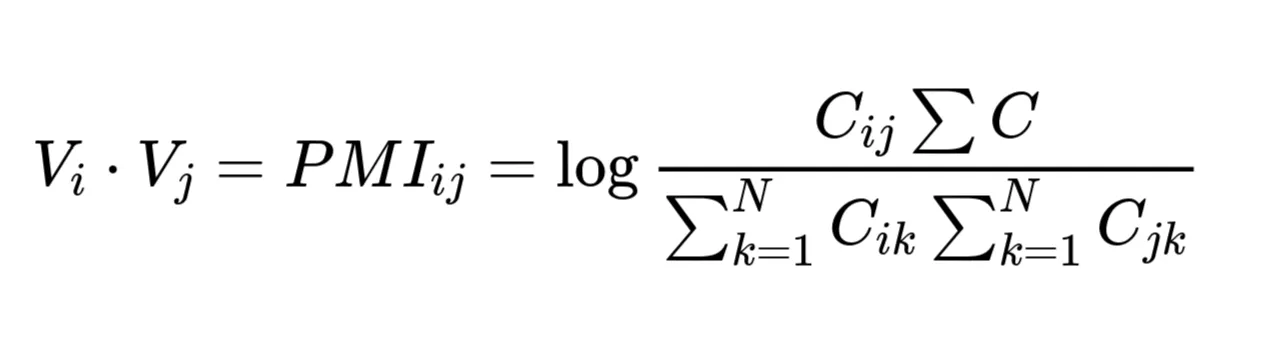

He first normalizes the code, splitting it into words (connectDatabase to ['connect', 'database']) and merging similar words together (connection with connect). He then computes a co-occurrence matrix.
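To make the normalization step more concrete, here is a minimal sketch of how identifiers could be split and merged. It is only my own illustration, not Vadim’s code: the `STEMS` table and the function names are hypothetical, and a real pipeline would rely on a proper stemmer rather than a hand-written dictionary.

```python
import re

# Hypothetical stem table: a real pipeline would use a proper stemmer.
STEMS = {"connection": "connect", "connecting": "connect", "databases": "database"}

def split_identifier(name):
    """Split a camelCase or snake_case identifier into lowercase words."""
    # Acronyms (DB), capitalized or lowercase words (Database, get), and digits.
    parts = re.findall(r"[A-Z]+(?![a-z])|[A-Z]?[a-z]+|\d+", name)
    return [part.lower() for part in parts]

def normalize(name):
    """Split an identifier, then merge similar words using the stem table."""
    return [STEMS.get(word, word) for word in split_identifier(name)]

print(normalize("connectDatabase"))   # ['connect', 'database']
print(normalize("openDBConnection"))  # ['open', 'db', 'connect']
```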

The co-occurrence matrix gives the number of times each term appears along with another one. If we spot a get in the code, there is a high chance of spotting a set nearby. Hence, the coefficient for the [get, set] pair would be quite high. The measure built on these counts is called Pointwise Mutual Information (PMI): the logarithm of how often two terms appear together, divided by how often they would appear together if they were independent.
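A tiny example helps to see what these numbers look like. The sketch below assumes that each "document" is the list of words found in one function, and that two words co-occur when they appear in the same document. That is a simplification of mine: the data is made up, and real tools usually count within a sliding context window instead.

```python
import math
from collections import Counter
from itertools import combinations

def cooccurrences(documents):
    """Count how often each unordered pair of terms appears in the same document."""
    pairs = Counter()
    for tokens in documents:
        for a, b in combinations(sorted(set(tokens)), 2):
            pairs[(a, b)] += 1
    return pairs

def pmi(pairs, documents, a, b):
    """PMI(a, b) = log(p(a, b) / (p(a) * p(b))), with document-level probabilities."""
    n = len(documents)
    p_a = sum(a in doc for doc in documents) / n
    p_b = sum(b in doc for doc in documents) / n
    p_ab = pairs[tuple(sorted((a, b)))] / n
    return math.log(p_ab / (p_a * p_b)) if p_ab > 0 else float("-inf")

# Made-up corpus: each list holds the words of one function.
docs = [
    ["get", "set", "value"],
    ["get", "set", "name"],
    ["connect", "database"],
    ["get", "set", "database"],
]
pairs = cooccurrences(docs)
print(pairs[("get", "set")])                   # 3
print(pmi(pairs, docs, "get", "set") > 0)      # True: seen together more than chance
print(pmi(pairs, docs, "database", "get") < 0) # True: seen together less than chance
```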

Once the co-occurrence matrix is computed, Vadim applies a Stochastic Gradient Descent to it. What is this? Well… Something that produces nice charts? Charts like…

Computing such a matrix consumes massive computing resources. Applying it to all GitHub repositories at once is therefore not possible. Instead, Vadim split this huge work into much smaller pieces, gathering all their outputs using back propagation. Computing these matrices on several data pieces and gathering them back is a common need for data scientists. That’s the way the Swivel algorithm works. Vadim and his team reimplemented it with TensorFlow, and open-sourced it: tensorflow-swivel.
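To get a feeling for the "small pieces" idea, here is a rough sketch of a gradient descent that learns one vector per row and per column of a matrix, visiting the matrix one block (shard) at a time. It is only loosely inspired by the description above and is not the tensorflow-swivel code: the function names, the shard size, and the plain squared-error loss are my assumptions, and the shards run one after the other instead of on separate machines.

```python
import random

def train_sharded(matrix, dim=2, shard=2, epochs=500, lr=0.05, seed=0):
    """Learn row/column vectors whose dot products approximate `matrix`, shard by shard."""
    rng = random.Random(seed)
    n_rows, n_cols = len(matrix), len(matrix[0])
    rows = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(n_rows)]
    cols = [[rng.uniform(-0.5, 0.5) for _ in range(dim)] for _ in range(n_cols)]
    # Each shard is a block of the matrix small enough to be processed alone.
    shards = [(r, c) for r in range(0, n_rows, shard) for c in range(0, n_cols, shard)]
    for _ in range(epochs):
        rng.shuffle(shards)
        for r0, c0 in shards:
            for i in range(r0, min(r0 + shard, n_rows)):
                for j in range(c0, min(c0 + shard, n_cols)):
                    pred = sum(x * y for x, y in zip(rows[i], cols[j]))
                    err = pred - matrix[i][j]
                    for k in range(dim):
                        # Gradient step on the squared error for this single cell.
                        ri, cj = rows[i][k], cols[j][k]
                        rows[i][k] -= lr * err * cj
                        cols[j][k] -= lr * err * ri
    return rows, cols

def loss(matrix, rows, cols):
    """Sum of squared differences between the matrix and the learned dot products."""
    return sum(
        (sum(x * y for x, y in zip(rows[i], cols[j])) - matrix[i][j]) ** 2
        for i in range(len(matrix))
        for j in range(len(matrix[0]))
    )

# Made-up 4x4 matrix with two obvious groups of related terms.
m = [[2, 2, 0, 0], [2, 2, 0, 0], [0, 0, 1, 1], [0, 0, 1, 1]]
before = loss(m, *train_sharded(m, epochs=0))
after = loss(m, *train_sharded(m))
print(after < before)
```

The learned vectors are what end up on the nice charts: terms that co-occur often get vectors pointing in similar directions.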

All this work allows IDEs to predict what we want to type (set after get), or to fix typos (gti instead of git).

What I Thought

That was a really interesting talk. Vadim gave several technical terms without expanding them. That was a perfect balance: I didn’t feel lost and have a list of subjects for further study.

Traps to avoid when working with AI

How can we detect a fault on a production line? The naive answer is generally to use convolutional networks to classify products as “good” or “bad”. Virginie Mathivet ran the test. On her first attempt, she already reached an impressive accuracy of 99.1%! Convolutional networks are the future of AI!

Accuracy Paradox

Or not. Getting such a result on the first try generally reveals an issue. To investigate, Virginie took a look at the confusion matrix. This is an array giving the numbers of:

- false positives,

- true positives,

- false negatives,

- true negatives.

The confusion matrix showed that the network didn’t spot a single defective item. It got the incredible score of 99.1% because the production line had only 0.9% of defects. This is what is called the Accuracy Paradox. When a class is over-represented compared to the other ones, the network prefers classifying all inputs into this class. This way, it maximizes its success rate while minimizing its effort. Smart AI!
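The paradox is easy to reproduce with a few lines of code. The sketch below uses made-up data matching the proportions of the talk (9 defective products out of 1,000) and a "lazy" classifier that answers "good" every time. The function and variable names are mine.

```python
def confusion_matrix(actual, predicted, positive="bad"):
    """Return counts of true/false positives and negatives for a binary classifier."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for truth, guess in zip(actual, predicted):
        if guess == positive:
            counts["tp" if truth == positive else "fp"] += 1
        else:
            counts["fn" if truth == positive else "tn"] += 1
    return counts

# 1,000 products, 9 of them defective (0.9%).
actual = ["bad"] * 9 + ["good"] * 991
# A lazy classifier that labels everything as "good".
predicted = ["good"] * 1000

cm = confusion_matrix(actual, predicted)
accuracy = (cm["tp"] + cm["tn"]) / len(actual)
recall = cm["tp"] / (cm["tp"] + cm["fn"])

print(cm)        # {'tp': 0, 'fp': 0, 'tn': 991, 'fn': 9}
print(accuracy)  # 0.991
print(recall)    # 0.0
```

Accuracy looks great, but the recall (the share of defective items actually caught) is zero, which is what the confusion matrix reveals.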

Preventing the effects of the accuracy paradox may be done in several ways, including:

- Penalizing the network when a defective item picture is missed,

- Reducing the gap between classes (kind of AI marxism), by increasing the number of bad product pictures,

- Resampling: slightly altering the available pictures (flips, rotations, crops, etc.) to artificially increase the content of under-represented classes.
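Two of these ideas can be sketched in a few lines. This is an illustration under my own assumptions, not what Virginie showed: class weights are computed as the inverse of class frequency, and pictures are represented as plain lists of pixel rows so that flips need no image library.

```python
from collections import Counter

def class_weights(labels):
    """Weight each class inversely to its frequency, so missing a rare class costs more."""
    counts = Counter(labels)
    total = len(labels)
    return {label: total / (len(counts) * n) for label, n in counts.items()}

def flip_horizontal(image):
    """Mirror an image, given as a list of pixel rows, left to right."""
    return [row[::-1] for row in image]

def flip_vertical(image):
    """Mirror an image top to bottom."""
    return image[::-1]

def augment(images):
    """Return each image plus its horizontal and vertical flips."""
    result = []
    for image in images:
        result.extend([image, flip_horizontal(image), flip_vertical(image)])
    return result

weights = class_weights(["bad"] * 9 + ["good"] * 991)
print(weights["bad"] > weights["good"])  # True: a missed defect is penalized more

bad_pictures = [[[1, 2], [3, 4]]]
print(len(augment(bad_pictures)))        # 3 pictures out of 1
```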

Even when flipping all available pictures, the neural network is still too accurate. Perhaps the evaluation function has to be reviewed?

Evaluation Function Definition

Finding the correct evaluation function is tricky. For instance, let’s try to teach a robot not to collide with walls.

A first evaluation function may be “maximize time before colliding with a wall”. Well… A neural network is a hacker, thinking outside the rules. In this case, the robot just doesn’t move. It fulfills the requirement. But that’s not exactly what we want from it.

So, we need to add another constraint: maximize time before colliding by moving. Again, our hacker network makes the robot turn in a small circle. We still need to add another constraint.

Maximize time before colliding by moving forward. This time, the robot behaves correctly. It moves forward, and when detecting a wall, turns.
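The three successive evaluation functions can be written down as a toy example. Everything here is hypothetical: the `Run` summary, its fields, and the three candidate behaviours are numbers I made up to show which behaviour each function rewards.

```python
from dataclasses import dataclass

@dataclass
class Run:
    """Summary of one simulated episode (hypothetical fields, in seconds)."""
    name: str
    seconds_alive: float           # time before the first collision
    seconds_moving: float          # time spent moving, circles included
    seconds_moving_forward: float  # time spent actually going forward

def survive(run):
    """First attempt: maximize time before colliding."""
    return run.seconds_alive

def survive_while_moving(run):
    """Second attempt: only time spent moving counts."""
    return run.seconds_moving

def survive_while_moving_forward(run):
    """Third attempt: only time spent moving forward counts."""
    return run.seconds_moving_forward

candidates = [
    Run("idle", 60, 0, 0),        # never moves, never collides
    Run("spinner", 60, 60, 0),    # turns in a small circle
    Run("explorer", 45, 45, 40),  # moves forward and turns near walls
]

for evaluate in (survive, survive_while_moving, survive_while_moving_forward):
    best = max(candidates, key=evaluate)
    print(evaluate.__name__, "->", best.name)
# survive -> idle
# survive_while_moving -> spinner
# survive_while_moving_forward -> explorer
```

Each function is perfectly satisfied by its winner. Only the last one rewards the behaviour we had in mind.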

Virginie drew a parallel I can confirm as a parent. Ask a child to eat with a fork. They will probably take the food with their hand, stick it on the fork, and then put the fork in their mouth. Give rules to your neural network as you would with a child: “eat with your fork without using your hands”.

What I Thought

That was another great talk. With concrete examples, clear explanations of inputs and outputs, no jargon… I really love this kind of talk where you learn a lot without any technical obstacles.

Intelligence for Science: You Can Do a Lot!

That was a more “commercial” talk promoting the CrowdAI website. Mohanty presented some examples of game learning challenges. His website regularly organizes deep learning competitions. Find the best neural network to make a skeleton walk, to create music, or even to play Doom…

The aim of this talk was to demystify machine learning. It has never been so accessible (his own words), thanks to a lot of existing tools such as Anaconda.

What I Thought

Encouraging developers to do some machine learning is great, even if I am not sure the audience was the right target. If we are at DotAI, that’s because we already want to do some AI. In my opinion, this talk would have fit better in a lightning talk slot than in a regular one.

Note that if you want to exercise machine learning, you can also give Kaggle a try.

Lightning Talks

There were several lightning talks. Here is, in no particular order, what I retained:

- Listen: grab all the technical terms you hear, even if you don’t understand them now. They may be useful in the future;

- Use several pre-existing architectures for your task, and test which one works best;

- Test as soon as possible on full real data;

- Kafka is a queuing system often used in machine learning.

What I Thought

When I read “lightning talks”, I expect commercial talks from sponsors, as already experienced in other conferences. Not this time. Even if I wasn’t interested in all the topics, I was glad to see real topics, and not “we are hiring” presentations. Good point then!

Building trust through Explainable AI

Peet Denny explained the difference between accuracy and explainability. Generally, the more accurate a machine learning algorithm is, the less explainable it is.

Linear regression is the most explainable form. It always produces the same output for a given input. Such an explainable algorithm is useful in the medical field. A doctor won’t agree to give a patient a random treatment based on some obscure machine predictions.

After these generalities, I must admit that Peet lost me. Hence, I won’t be able to cover the rest of his presentation. Yet, you still have the slides above.

What I Thought

Not a lot. Either this talk was too technical, or I was too distracted to follow this one.

AI Becomes Distributed

Jim Dowling talked about distributed AI systems. AI handles more and more complex problems. We need to handle ever larger training datasets, more complex models, and so on. As a consequence, we need more computing power. That’s why AI is now (and should be) distributed. For instance, between 2012 and 2018, thanks to distribution, computing power has been multiplied by 300,000!

There are several ways to parallelize. We can either parallelize models between GPUs (each GPU being a layer of the network) or parallelize data. In the latter case, the training data is split into several chunks. Each chunk is then sent to a different GPU to train the network. For decent performance, we need a distributed file system as well, such as Hadoop Distributed File System (HDFS).
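Data parallelism can be sketched without any GPU at all. In the toy example below, which is my own illustration and not one of the snippets from the talk, the "network" is a single weight `w` fitted to samples of y = 3x. The data is split into chunks, each chunk produces its own gradient (on a real cluster, one per GPU), and the gradients are averaged before updating the shared weight.

```python
def split(data, n_workers):
    """Split the training data into n_workers chunks of near-equal size."""
    return [data[i::n_workers] for i in range(n_workers)]

def gradient(w, chunk):
    """Gradient of the mean squared error of y = w * x on one chunk."""
    return sum(2 * (w * x - y) * x for x, y in chunk) / len(chunk)

def train(data, n_workers=4, steps=200, lr=0.01):
    """Fit w by averaging per-chunk gradients at every step."""
    w = 0.0
    chunks = split(data, n_workers)
    for _ in range(steps):
        # Each gradient would be computed on its own GPU; here they run in turn.
        grads = [gradient(w, chunk) for chunk in chunks]
        # Gather step: average the workers' gradients, then update the shared weight.
        w -= lr * sum(grads) / len(grads)
    return w

data = [(x, 3.0 * x) for x in range(1, 9)]  # made-up samples of y = 3x
w = train(data)
print(round(w, 3))  # 3.0
```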

Handling such a distributed file system is really complex for developers. This is the main reason why neural networks are often monolithic, as it requires a lot of effort to distribute them. This pushed Jim Dowling and his team to develop Hops. Hops offers an easy-to-access distributed file system. And, the icing on the cake, it outperforms HDFS.

What I Thought

I have never played with distributed file systems, either in Python or in other languages. Yet, looking at the presented snippets, it looks quite straightforward. And thinking about all the benefits distribution can bring, especially faster training, I would probably try distributing networks early in my data scientist learning process.

About Organization

A few words about the organization to finish this post. No real issue here, but hey, positive feedback is still feedback, isn’t it?

The location was great: close to the center of Paris, big room, nice stage backdrop… Another good point: the air conditioning. It seems like a detail, but I guarantee you are far more attentive to the speaker when you don’t dream of rushing to the nearest booth for a fresh glass of water.

The duration may seem like an issue at first glance. A half-day event is rare nowadays. However, I prefer to keep such an event short and remove all long and boring talks. And that was the case. Except for one very technical talk I didn’t manage to follow, all were really enjoyable.

Talk duration was also a great positive point. As I regularly mention when speaking about giving better talks, talks that are too long are great for the organization, not for people. Focus lasts about 20 minutes. And that’s exactly the duration of each talk!

In addition to this ideal rhythm, there were several 30-minute breaks. That’s the most important part of any conference: this is the moment when you can chat with developers you don’t know and share your technical (but not only) experience. Networking is at least as important as talks. And the organizers understand that really well!

Breaks are also the time to get some snacks. Have you ever attended an event where you can grab some food without wasting time in a queue? I hadn’t. A nice distribution of all the booths and fast service… That was the best experience of all the conferences I have attended.

Great job on the organization! This is not the Dot conferences’ first try, and it shows. GG to the whole Dot Team!

Authors

Full-stack web developer at marmelab - Node.js, React, Angular, Symfony, Go, Arduino, Docker. Can fit a huge number of puns into a single sentence.