vibe-spec: Generate Specifications From Coding Agent Logs

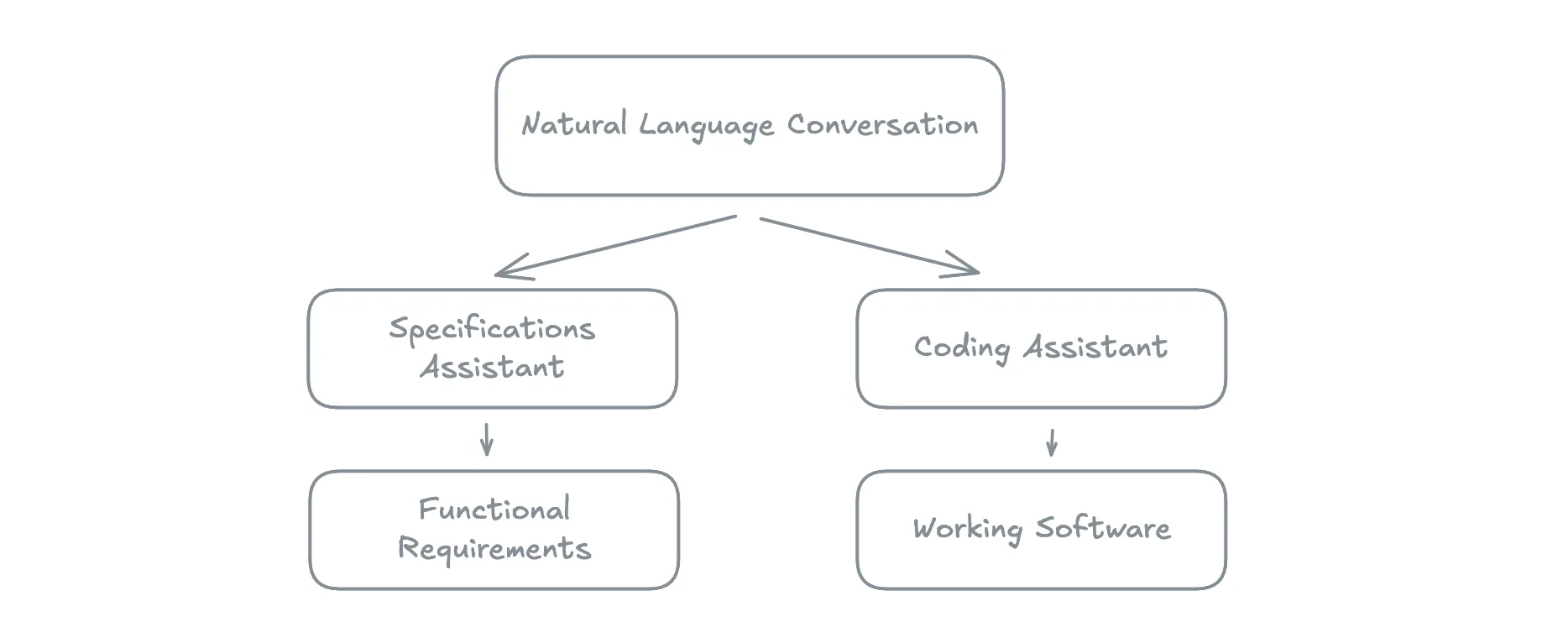

With AI coding assistants, natural language developers can build software iteratively. But without specifications or user stories, projects lack functional definitions. This creates a knowledge gap that degrades software quality over time. Fortunately, the problem statements are already in the conversation logs—we just need to extract them.

Building Software with AI Coding Assistants

AI coding assistants are extremely effective at developing software semi-autonomously. I have experienced that firsthand, in vibe coding sessions (I prefer the term “Natural Language Development”) that produced working software without my ever writing a single line of code. I do look at the code, though, and I often ask the assistant to refactor it one way or another, but I only use plain English to describe what I want.

One example is a 3D sculpting tool with adaptive mesh that I built for the web, inspired by ZBrush. It lets users add or remove matter from the currently selected shape, supports symmetry axes, and offers most of what you expect from a 3D modeling tool. Here is a demo of this tool in action:

You can also test it online and see the code.

I developed this tool entirely via Claude Code, using instructions like the following:

[14:06] It works. But I want to modify the mouse interactions. Left click should add matter, and middle click should be used to move the camera

[14:08] Now, I want the “add matter” action to be more realistic. For that, you’ll have to consider that clicking on an object is like adding more matter to the surface of the object, updating its geometry instead of rendering another sphere. I really want a sculpting tool.

[14:11] I want that when I keep the left mouse button down, it keeps adding more matter

[14:14] Now I want a toolbar that lets me add primitive shapes (sphere, cube, cylinder, etc) as well as selecting the “add matter” tool. The app should start with an empty scene. The user can add e.g. a sphere, then select the “add matter” tool, and start sculpting that sphere.

[14:19] the sculpt tool no longer works on the objects I’ve added

[14:20] No, it still doesn’t work, clicking on a sphere I just added doesn’t deform it

[14:36] When adding matter, the existing vertices grow, and after some time the sculpting tool doesn’t work because I’m modifying large triangles, i.e. planes without inner mesh. I think we should fix this by adding a dynamic mesh subdivision system: when the user clicks on a mesh to sculpt it, if the geometry of that spot isn’t fine enough, subdivide it to let the user add more details.

[15:20] there seems to be a problem with the subdivision and with the sculpt tool. Let me explain the problem. First, when I use the sculpt tool, the mesh sometimes shows holes. This means the subdivision creates an incorrect geometry, and some divided triangles are along non-divided triangles. Second, the sculpt button doesn’t seem to alter the existing mesh in the right way. It should move vertices in the direction of the average normal of all the triangles in the location of the tool. By the way, I’d like a preview of the sculpt tool size, that shows the affected region.

You can see the full conversation log (including lots of typos and the agent replies) in the project repository: claude_code_logs.md.
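To make the 15:20 request more concrete, here is a minimal Python sketch of the behavior it describes: vertices under the brush move along the average normal of the nearby triangles. This is not the code Claude Code produced for the tool. The function name `add_matter`, its parameters, the linear falloff, and the rule "a triangle counts if at least one of its vertices is inside the brush" are all assumptions made for illustration.

```python
import math


def sub(a, b):
    """Component-wise a - b for 3D tuples."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a, b):
    """Cross product of two 3D tuples."""
    return (
        a[1] * b[2] - a[2] * b[1],
        a[2] * b[0] - a[0] * b[2],
        a[0] * b[1] - a[1] * b[0],
    )


def normalize(v):
    """Scale v to unit length; a zero vector stays zero."""
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if length == 0:
        return (0.0, 0.0, 0.0)
    return (v[0] / length, v[1] / length, v[2] / length)


def add_matter(vertices, triangles, hit, radius, strength):
    """Return a new vertex list with vertices near `hit` pushed outward.

    Hypothetical helper: `radius` must be > 0, `triangles` holds index triples.
    """
    # Vertices inside the brush sphere centered on the hit point.
    affected = {i for i, v in enumerate(vertices) if math.dist(v, hit) <= radius}

    # Sum the unit normals of every triangle touching the brush, then
    # normalize the sum to get the average direction.
    total = (0.0, 0.0, 0.0)
    for a, b, c in triangles:
        if affected & {a, b, c}:
            n = normalize(cross(sub(vertices[b], vertices[a]),
                                sub(vertices[c], vertices[a])))
            total = (total[0] + n[0], total[1] + n[1], total[2] + n[2])
    direction = normalize(total)

    result = list(vertices)
    for i in affected:
        v = vertices[i]
        # Assumed linear falloff: full strength at the hit point, zero at the edge.
        weight = 1.0 - math.dist(v, hit) / radius
        step = strength * weight
        result[i] = (
            v[0] + direction[0] * step,
            v[1] + direction[1] * step,
            v[2] + direction[2] * step,
        )
    return result


# A flat quad in the XY plane, split into two counter-clockwise triangles.
quad = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 1.0, 0.0), (0.0, 1.0, 0.0)]
faces = [(0, 1, 2), (0, 2, 3)]
sculpted = add_matter(quad, faces, hit=(0.0, 0.0, 0.0), radius=0.5, strength=0.2)
print(sculpted[0])
```

With this small brush, only the corner under the hit point moves, and it moves along +Z because both triangles face that way. The other three vertices stay in place.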

The Problem of Missing Functional Definitions

As you can see, I built the software in an iterative way, adding features or modifying them little by little. I’m heavily influenced by Lean Startup and Agile software development, where you skip the initial specifications phase. I find that Natural Language Development works particularly well for that coding workflow. It even allows me to skip writing user stories altogether: I simply describe one requirement to the assistant with minimal details, let it build an initial implementation, then refine from there.

This approach has one major drawback, though: as there is no initial specification and no user stories, the application has no functional definition whatsoever. Newcomers joining such a project will see the outcome of the development process, but never the intent or the initial problem. If the software behaves in a strange way, there is no way to determine if it’s a bug or a feature. It’s as if I handed the source code of Microsoft Word to a developer without ever introducing them to the concepts of word processors. That’s a recipe for disaster.

So, Natural Language Development produces a gap in domain knowledge. This gap degrades the quality of the software as more and more features are added, and more and more knowledge is lost.

Generating Specifications from Conversation Logs

Fortunately, the problem statements are contained in the conversation logs. Look again at how I introduced the concept of adaptive subdivision:

When adding matter, the existing vertices grow, and after some time the sculpting tool doesn’t work because I’m modifying large triangles, i.e. planes without inner mesh. I think we should fix this by adding a dynamic mesh subdivision system: when the user clicks on a mesh to sculpt it, if the geometry of that spot isn’t fine enough, subdivide it to let the user add more details.

It’s all there! So we can use the coding agents’ conversation logs as a source for functional specifications. And instead of doing that ourselves, we can ask an LLM to do it for us.

That’s the idea behind marmelab/vibe-spec, a CLI tool I built and open-sourced this week.

Run it inside a project directory, and it will gather the Claude Code logs for that project and send them to the OpenAI API with instructions for building a set of functional requirements.

$ vibe-spec spec

The result is a Markdown document that looks like the following:

Sculpt Tools

Sculpt tools provide clay-like deformation capabilities with three primary operations: Add, Subtract, and Push.

Users can select these tools from the toolbar to modify the selected object interactively. With a sculpt tool active and an object selected, moving over the mesh reveals a circular brush preview indicating the affected area. Pressing and holding the pointer down on the mesh begins operation, raycasting to determine hit position and triangle. Add/Subtract displace vertices along an averaged local normal; Push displaces in world-space drag direction. Brush parameters include Brush Size and Strength. Users can adjust these via keyboard shortcuts (+/- for size, Shift+ +/- for strength) or UI controls.

Business/validation rules:

- Sculpting must be continuous and localized;

- Sculpting operations automatically subdivide the mesh for detail;

- Affected vertices are limited to brush radius and adjacency rings;

- Per-frame displacement is clamped to prevent inverted normals or self-intersection;

- Symmetry options allow mirroring across X, Y, Z axes.

- Sculpt tools don’t cause tearing or mesh artifacts.

Mobile UI adjustments: An optional modal dialog provides compact controls for brushSize, brushStrength and symmetry toggles. This dialog is optional and defaults to collapsed on tool selection to keep the canvas clear.

The same source (the conversation with a software agent) serves both to build the software and to document it.
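The pipeline described in this section (collect the session logs, then hand them to an LLM with spec-writing instructions) can be sketched in a few lines of Python. This is an illustration, not vibe-spec's actual implementation. The log record shape, the `SPEC_INSTRUCTIONS` prompt, and both function names are assumptions. The sketch also keeps only the user messages, which is a simplification, and it stops at building the message list instead of calling an API.

```python
import json

# Hypothetical prompt; the instructions vibe-spec really sends are not shown here.
SPEC_INSTRUCTIONS = (
    "You are a product analyst. From the developer requests below, "
    "write functional requirements grouped by feature, in Markdown."
)


def extract_user_requests(jsonl_text):
    """Return the plain-text user messages found in a JSONL session log.

    Assumed record shape (an assumption, not a documented format):
    {"type": "user", "message": {"content": "..."}} where content may also
    be a list of blocks such as {"type": "text", "text": "..."}.
    """
    requests = []
    for line in jsonl_text.splitlines():
        line = line.strip()
        if not line:
            continue
        try:
            record = json.loads(line)
        except json.JSONDecodeError:
            continue  # skip truncated or non-JSON lines
        if not isinstance(record, dict) or record.get("type") != "user":
            continue
        message = record.get("message")
        content = message.get("content") if isinstance(message, dict) else None
        if isinstance(content, str):
            text = content
        elif isinstance(content, list):
            # Keep only text blocks; any other block type is dropped.
            text = "\n".join(
                block.get("text", "")
                for block in content
                if isinstance(block, dict) and block.get("type") == "text"
            )
        else:
            text = ""
        text = text.strip()
        if text:
            requests.append(text)
    return requests


def build_spec_messages(requests):
    """Build a chat-style message list (system + user) for an LLM."""
    numbered = "\n".join(f"{i}. {req}" for i, req in enumerate(requests, start=1))
    return [
        {"role": "system", "content": SPEC_INSTRUCTIONS},
        {"role": "user", "content": "Developer requests:\n" + numbered},
    ]


sample_log = "\n".join([
    json.dumps({"type": "user", "message": {"content": "Left click should add matter"}}),
    json.dumps({"type": "assistant", "message": {"content": "Done."}}),
    json.dumps({"type": "user", "message": {"content": [
        {"type": "text", "text": "Add a preview of the sculpt tool size"},
    ]}}),
])
messages = build_spec_messages(extract_user_requests(sample_log))
print(messages[1]["content"])
```

Keeping the extraction separate from the prompt building makes it easy to inspect what would be sent before spending any tokens, and to swap the prompt without touching the log parsing.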

Does It Work?

I’ve tested vibe-spec on various projects that I vibe-coded, and the results are promising. The generated spec is better when I initially described the underlying problem to give some context to the assistant, instead of just directing it to implement the solution I’ve imagined. I’ve also noticed that Claude Code develops better when I share the initial problem, so it’s a good practice anyway.

I tend to commit the generated spec with the code. It’s easy to find, and the coding assistant can use this resource to better understand existing features. As such, it’s a fundamental part of context engineering.

Ideally, the spec should be updated at the same time as the code. I tried adding this practice to the coding agent instructions, but I realized I was spending too much time reviewing spec edits that were reverted a few minutes later (a consequence of the iterative development process). I prefer updating the spec at the end of a Natural Language Development session, which can span several hours or days, and a CLI feels better suited to that rhythm.

This process could be automated via a skill and/or an MCP server, but so far I haven’t needed it.

Conclusion

vibe-spec is like my personal scribe: it turns informal discussions into a well-structured and purpose-driven specification.

This tool allows for longer Natural Language Development sessions, spanning more than a few hours without losing quality, and without falling back to a spec-first approach.

It opens the door to new development workflows where specifications are generated continuously from the coding process itself, rather than being a prerequisite.

marmelab/vibe-spec is open-source. If you find it useful, please send me your feedback and don’t hesitate to contribute!

Authors

Marmelab founder and CEO, passionate about web technologies, agile, sustainability, leadership, and open-source. Lead developer of react-admin, founder of GreenFrame.io, and regular speaker at tech conferences.