AI's Environmental Impact: Making an Informed Choice

Modern AI tools offer impressive benefits but come with environmental costs. Large Language Models (LLMs) consume significant energy during training and inference, leading to substantial CO2 emissions.

As someone deeply concerned about climate change, I wonder if using AI tools is the right choice. Let’s look at the facts.

Disclaimer: I tried to source all my claims, but I’m just a software engineer. I’m not a researcher, so I don’t have the authority to verify these sources. I’m just sharing the result of my analysis, and my personal opinion on the matter. If you have factual counterarguments, I’d love to hear them!

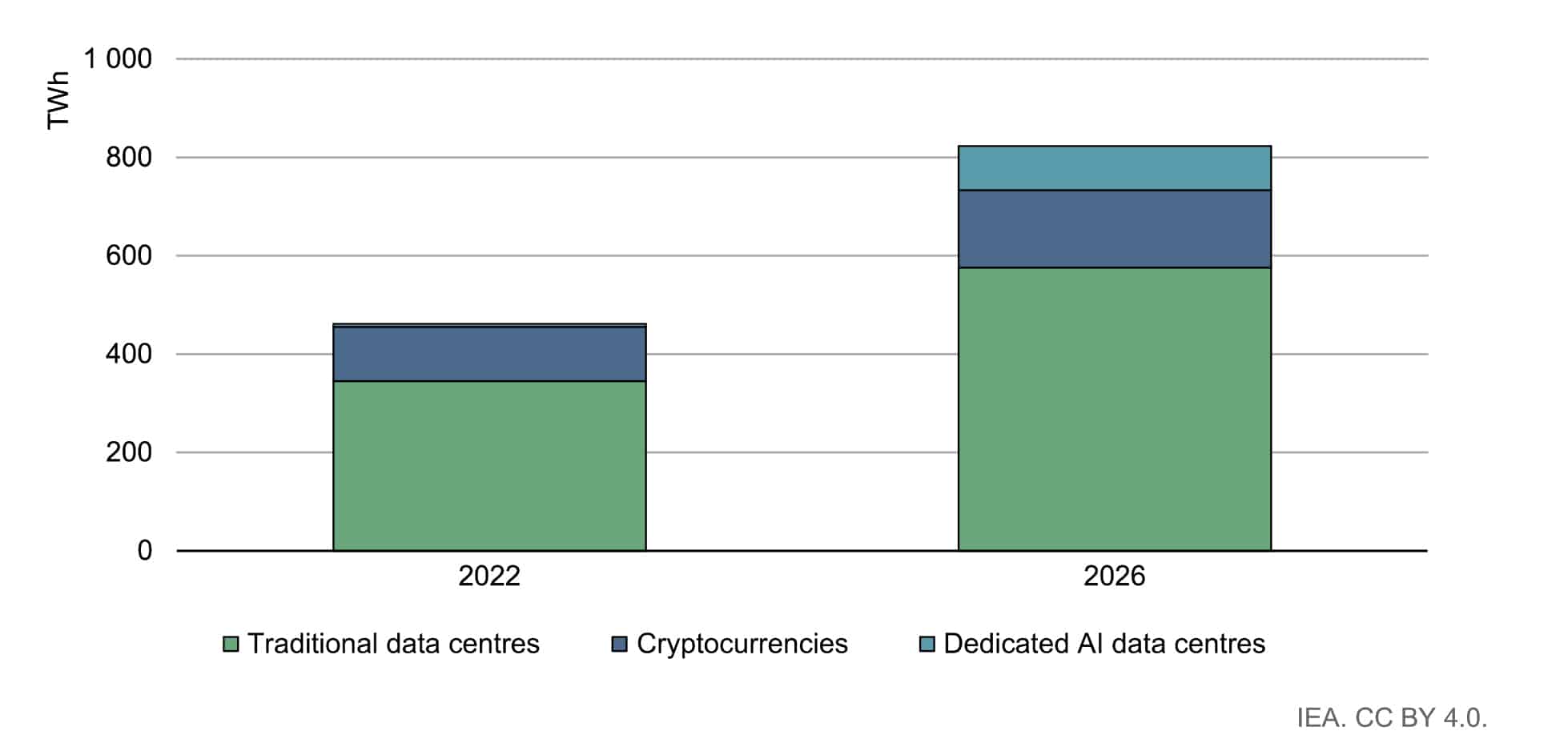

AI Does Consume A Lot Of Energy

Data centers around the globe consume a lot of energy, and AI is a big part of that. A July 2024 estimate by IDC suggests that AI data center energy consumption was 23 terawatt-hours (TWh) in 2022. That’s a lot of watt-hours! But it’s also hard to reason about.
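To make 23 TWh easier to picture, here is a small unit-conversion sketch. It converts terawatt-hours to watt-hours and, purely for scale, divides the result by the 0.3 Wh per-query estimate discussed later in this article. This is an illustrative comparison under that assumption, not a claim that all of this energy went to chat queries.

```python
# Unit-conversion sketch: how big is 23 TWh?
WH_PER_TWH = 1e12  # 1 terawatt-hour = 10^12 watt-hours

def twh_to_wh(twh: float) -> float:
    """Convert terawatt-hours to watt-hours."""
    return twh * WH_PER_TWH

ai_datacenters_2022_wh = twh_to_wh(23)  # IDC estimate for 2022, as quoted above

# Illustrative scale only: how many 0.3 Wh queries would that much energy cover?
WH_PER_QUERY = 0.3  # per-query estimate used later in the article (assumption here)
equivalent_queries = ai_datacenters_2022_wh / WH_PER_QUERY

print(f"{ai_datacenters_2022_wh:.2e} Wh")    # 2.30e+13 Wh
print(f"{equivalent_queries:.2e} queries")   # 7.67e+13 queries
```

In other words, 23 TWh is on the order of tens of trillions of chatbot-sized queries.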

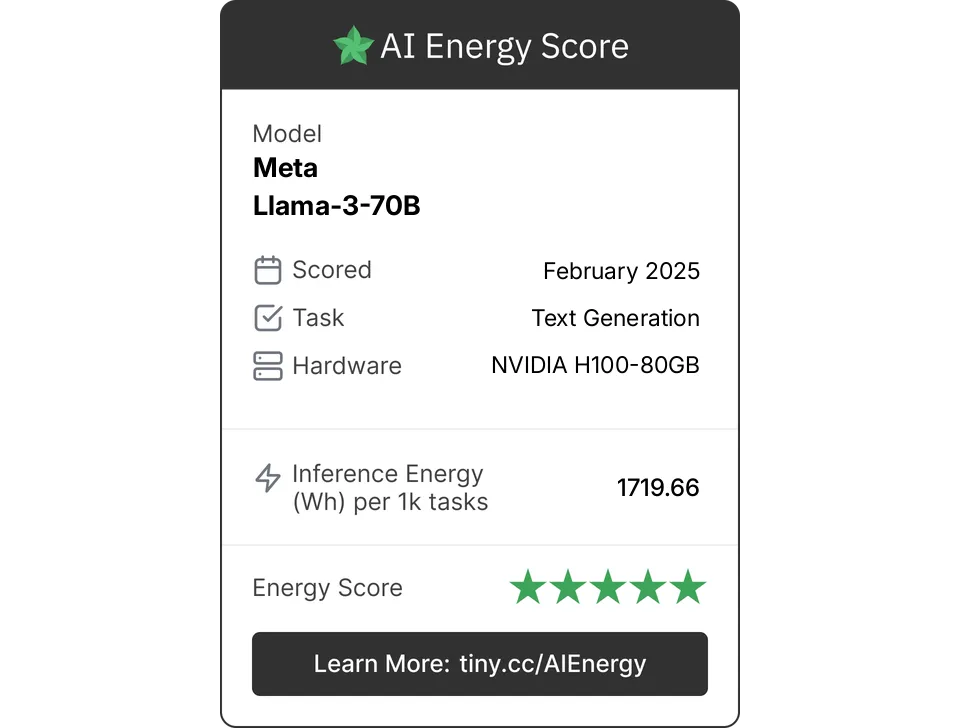

Computer scientists currently struggle to settle on a standardized way of measuring the emissions of AI. However, with access to open-source LLMs, it’s possible to measure the energy consumption on predefined workloads. That’s what the volunteers behind the AI Energy Score Benchmark did, and they recently published their first results.
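A generic way to measure inference energy on a fixed workload is to sample the accelerator’s power draw while the workload runs, integrate power over time, and divide by the number of queries served. The sketch below assumes you already have `(timestamp, watts)` samples, for example from a GPU monitoring tool; the function names are hypothetical, and this is an illustration of the principle, not the AI Energy Score Benchmark’s actual code.

```python
from typing import Sequence, Tuple

JOULES_PER_WH = 3600.0  # 1 Wh = 3600 J

def energy_wh(samples: Sequence[Tuple[float, float]]) -> float:
    """Integrate (time_s, power_w) samples with the trapezoidal rule; return Wh."""
    joules = 0.0
    for (t0, p0), (t1, p1) in zip(samples, samples[1:]):
        joules += (p0 + p1) / 2 * (t1 - t0)  # average power x interval = energy
    return joules / JOULES_PER_WH

def energy_per_query_wh(samples: Sequence[Tuple[float, float]], num_queries: int) -> float:
    """Average energy per query over a predefined workload."""
    if num_queries <= 0:
        raise ValueError("num_queries must be positive")
    return energy_wh(samples) / num_queries

# Hypothetical run: a steady 300 W draw for 12 seconds while serving 4 queries.
samples = [(float(t), 300.0) for t in range(13)]  # one sample per second, t = 0..12
print(energy_per_query_wh(samples, 4))  # 0.25
```

Real benchmarks also have to decide what to include (idle power, CPU, memory, cooling), which is one reason standardized numbers are hard to agree on.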

These results, of course, depend on the model size. The biggest open-source models tested have around 70 billion parameters and consume 30,000 times more energy than gpt2, a model small enough to fit on a single consumer GPU. The largest text generation model tested (Meta’s Llama-3-70B) consumes 1.7 Wh per query on average.

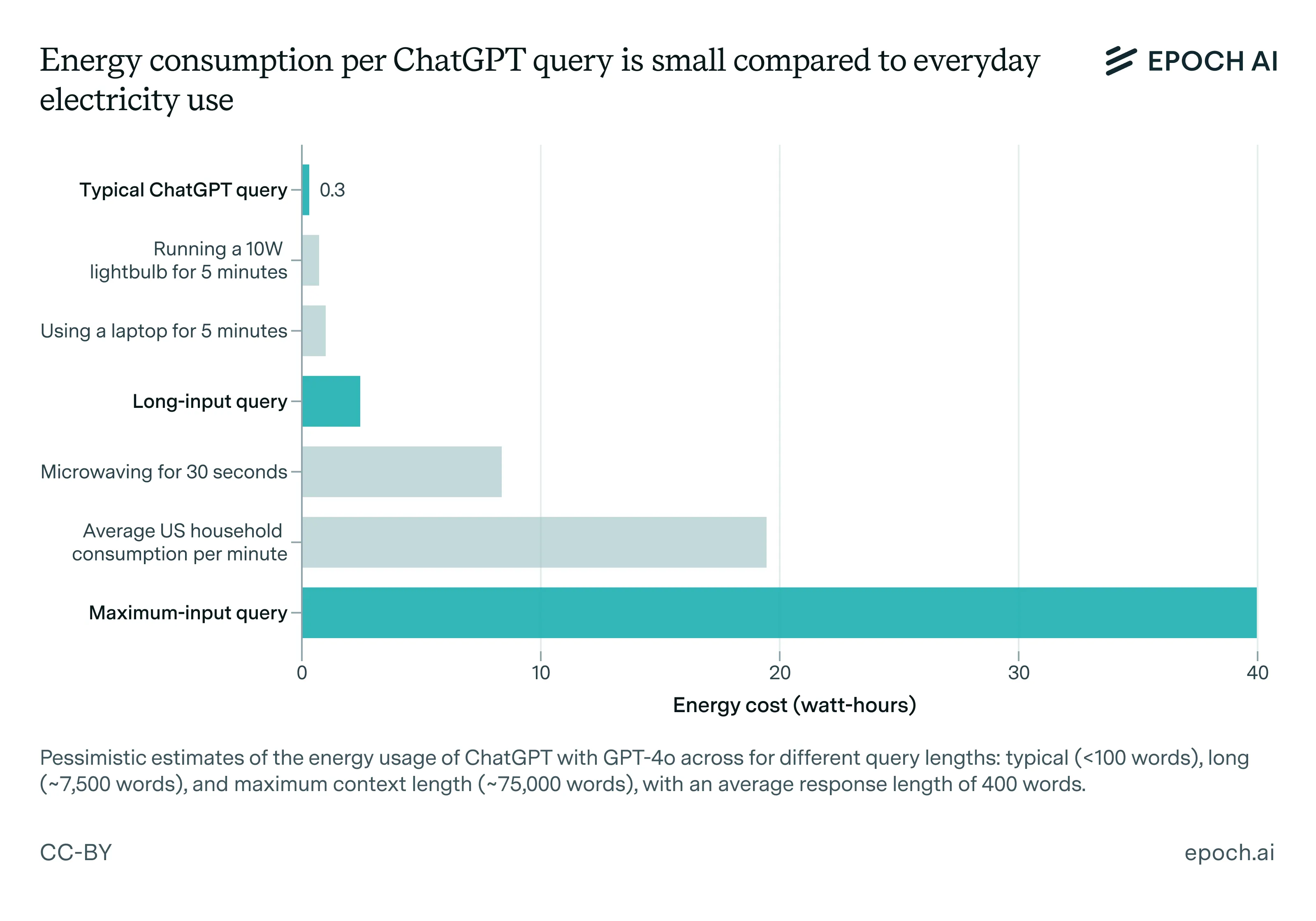

As for closed-source models like ChatGPT, Gemini, or Grok, even the number of parameters is a trade secret, and their energy consumption is not public. Alex de Vries’ widely cited paper estimates about 3 Wh per GPT-3 query. However, a more recent analysis by epoch.ai suggests that GPT-4, OpenAI’s flagship model, consumes about 0.3 Wh per query, a tenth of that.

This 0.3 Wh estimate for GPT-4 seems very low compared with the 1.7 Wh measured for the much smaller Llama-3-70B. However, the figure is plausible: it accounts for the improved efficiency of modern chips, the fact that ChatGPT probably activates only a fraction of its parameters for each query, and assumptions about typical query length.
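The point about activating only a fraction of the parameters can be made concrete with a common rule of thumb: a transformer’s forward pass costs roughly 2 floating-point operations (FLOPs) per active parameter per generated token. The sketch below compares a dense 70-billion-parameter model with a hypothetical mixture-of-experts model that uses only 20 billion parameters per token; those counts and the 500-token response length are illustrative assumptions, since OpenAI does not publish these figures.

```python
def forward_flops(active_params: int, tokens: int) -> int:
    """Rule-of-thumb inference cost: ~2 FLOPs per active parameter per token."""
    return 2 * active_params * tokens

TOKENS = 500  # hypothetical response length

# A dense 70B model uses all of its parameters for every token.
dense = forward_flops(active_params=70_000_000_000, tokens=TOKENS)
# Hypothetical MoE model: whatever its total size, only 20B parameters are used per token.
moe = forward_flops(active_params=20_000_000_000, tokens=TOKENS)

print(f"dense: {dense:.1e} FLOPs, MoE: {moe:.1e} FLOPs, ratio: {moe / dense:.2f}")
```

Energy then depends on how many FLOPs the hardware delivers per joule, which is why newer chips matter as much as model design.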

This estimate only concerns queries, or inference. Training large models is also very compute-intensive. An academic estimate for the training of GPT-3 gives an alarming 1.287 gigawatt-hours. Google analysts estimate that training accounts for 40% of the energy used by their generative AI, while the other 60% comes from running queries. And the power required to train frontier AI models is doubling annually.

A conservative assumption is that training accounts for 50% of the overall energy usage of AI. In other words, we need to double the inference energy consumption to get a rough estimate of the total energy consumption of AI. With our estimate of 0.3 Wh per query, this gives 0.6 Wh per query.
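The adjustment above generalizes to any assumed training share: if training accounts for a fraction `s` of total energy, the total energy per query is the inference energy divided by `1 - s`. The sketch below reproduces the 0.6 Wh figure and also shows the result with Google’s 40% split; both shares are assumptions, not measurements of ChatGPT.

```python
def total_wh_per_query(inference_wh: float, training_share: float) -> float:
    """Scale inference energy up so that training makes up `training_share` of the total."""
    if not 0 <= training_share < 1:
        raise ValueError("training_share must be in [0, 1)")
    return inference_wh / (1 - training_share)

print(round(total_wh_per_query(0.3, 0.5), 2))  # 0.6 (50% training assumption)
print(round(total_wh_per_query(0.3, 0.4), 2))  # 0.5 (Google's 40/60 split)
```

The 50% assumption is the more pessimistic of the two, which is what makes it conservative.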

Again, this is one estimate, depending on some assumptions. But it gives a rough idea of the order of magnitude.

So far, we’ve only talked about the energy consumption of AI. But manufacturing the hardware that runs AI also has a huge environmental cost. How much of that initial footprint should be attributed to each use depends on how long these massive GPU clusters will stay in service, and we don’t have a long enough history to know.
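One way to see why hardware lifetime matters is to spread the manufacturing (“embodied”) footprint evenly over every query the hardware serves. In the sketch below, the function name and all inputs are hypothetical placeholders, not real figures for any GPU cluster; the only point is that doubling the service life halves the embodied share of each query.

```python
def embodied_per_query(embodied_kg_co2e: float, lifetime_years: float,
                       queries_per_year: float) -> float:
    """Linear amortization of manufacturing emissions over all queries served."""
    total_queries = lifetime_years * queries_per_year
    if total_queries <= 0:
        raise ValueError("lifetime and query volume must be positive")
    return embodied_kg_co2e / total_queries

# Hypothetical cluster: made-up values chosen only to illustrate the effect.
EMBODIED = 1_000_000.0      # kg CO2e to manufacture the hardware (made-up)
QUERIES_PER_YEAR = 1e10     # queries served per year (made-up)

for years in (3, 6):
    grams = embodied_per_query(EMBODIED, years, QUERIES_PER_YEAR) * 1000
    print(f"{years} years: {grams:.4f} g CO2e per query")
```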

Note: For more figures and sources, check out this great article by Greenpeace: ChatCO2 – Safeguards Needed For AI’s Climate Risks.

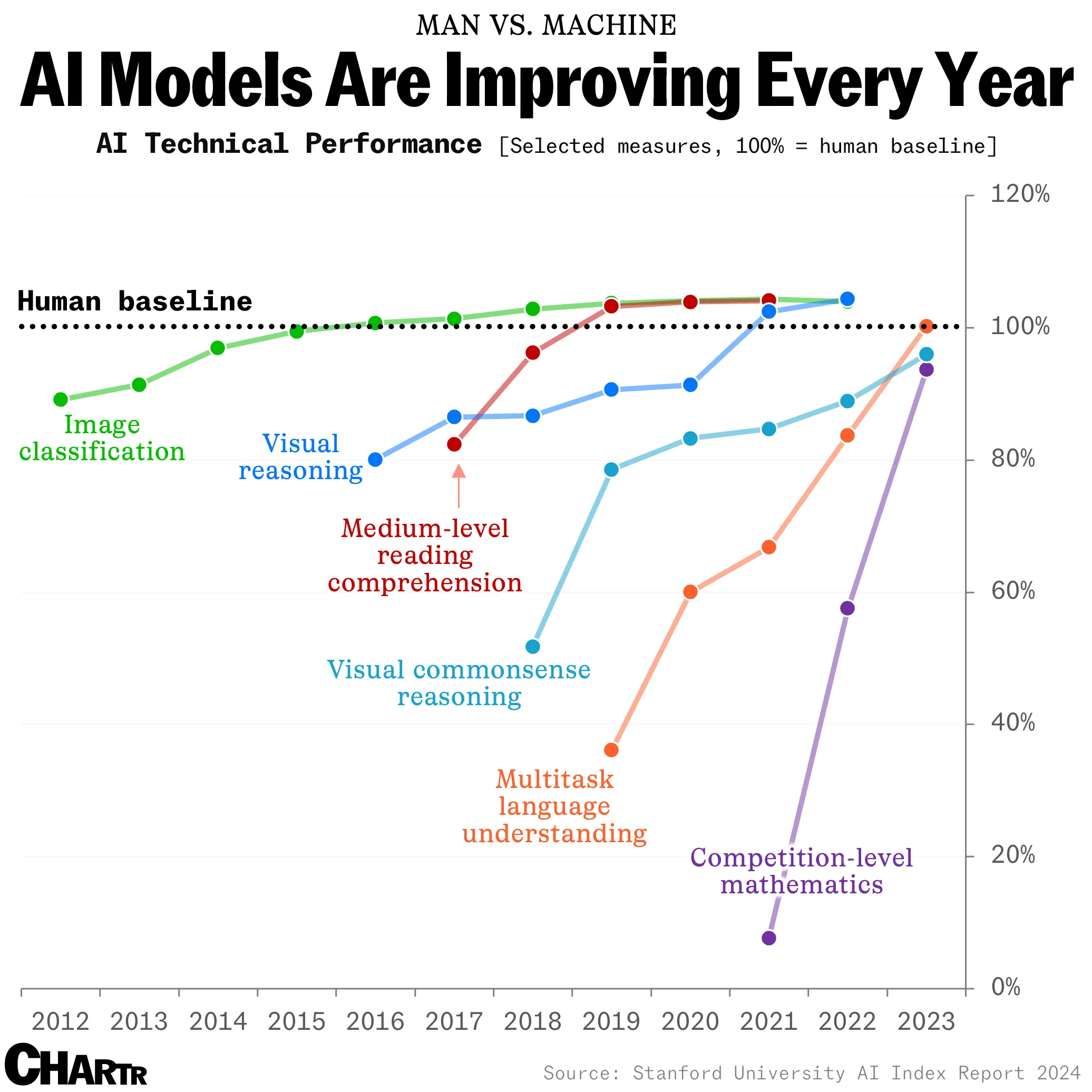

AI Efficiency is Progressing

These figures are alarming, but they actually show impressive progress. LLMs used to consume much more energy in past years. The progress in AI efficiency comes from both software and hardware innovations.

Thanks to knowledge distillation, model pruning, mixture-of-experts (MoE), quantization, and other compression techniques, smaller models reach the same performance as larger models with a fraction of the energy consumption. For example, Llama-3.3-70B, with 70 billion parameters, outperforms GPT-4-Turbo, with an estimated 1.8 trillion parameters (check the benchmark on LLMArena). That’s a model 25 times smaller, in just a few months.

New model architectures like Large Language Diffusion Models (LLaDA) show reduced training costs, and multi-head latent attention (MLA) shows reduced inference costs, for the same quality of responses. Micro-optimizations like using assembly rather than CUDA also make a big difference. One example of these advances is DeepSeek-V3 (671 billion parameters), which required an estimated $5.5M for training and outperforms GPT-4, which cost around $100M to train and reportedly contains about 3x more parameters.

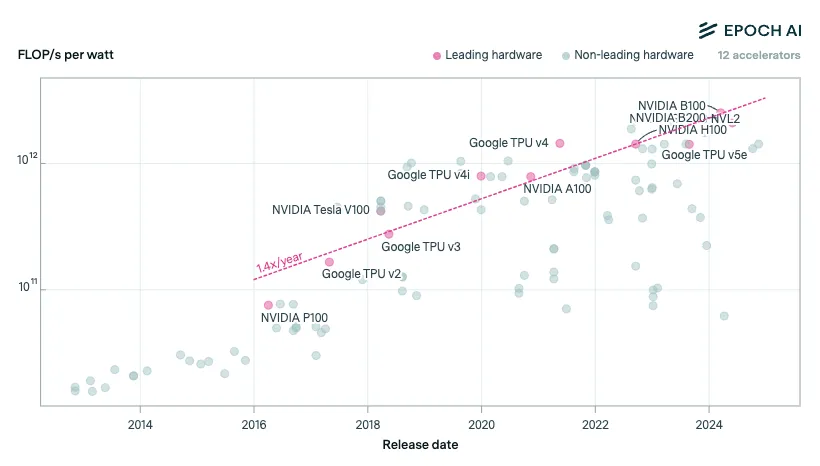

Advances in hardware also increase the performance per watt of GPU clusters. Leading ML hardware becomes 40% more energy-efficient each year.
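A 40% yearly gain compounds quickly. Reading it as 40% more computation per unit of energy each year (an interpretation, assumed here), the energy needed for a fixed workload falls to 1/1.4^n of its initial value after n years, as this small sketch shows.

```python
def relative_energy(years: int, yearly_gain: float = 0.40) -> float:
    """Energy for a fixed workload relative to year 0, if efficiency grows by `yearly_gain` per year."""
    return 1 / (1 + yearly_gain) ** years

for n in (1, 3, 5):
    print(f"after {n} year(s): {relative_energy(n):.0%} of the original energy")
```

After five years, the same workload would need less than a fifth of its original energy.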

With recent hardware, both training and inference processes are more efficient.

All this leads some data scientists to predict that the carbon footprint of machine learning training will plateau, then shrink. Not everyone agrees: other studies argue that the current trend of compute scaling can continue until 2030 despite key bottlenecks in scaling up compute for AI pre-training (power infrastructure, GPU production, data availability, and latency). But this last study focuses on “frontier models,” the most expensive models to train, and not necessarily the most used ones.

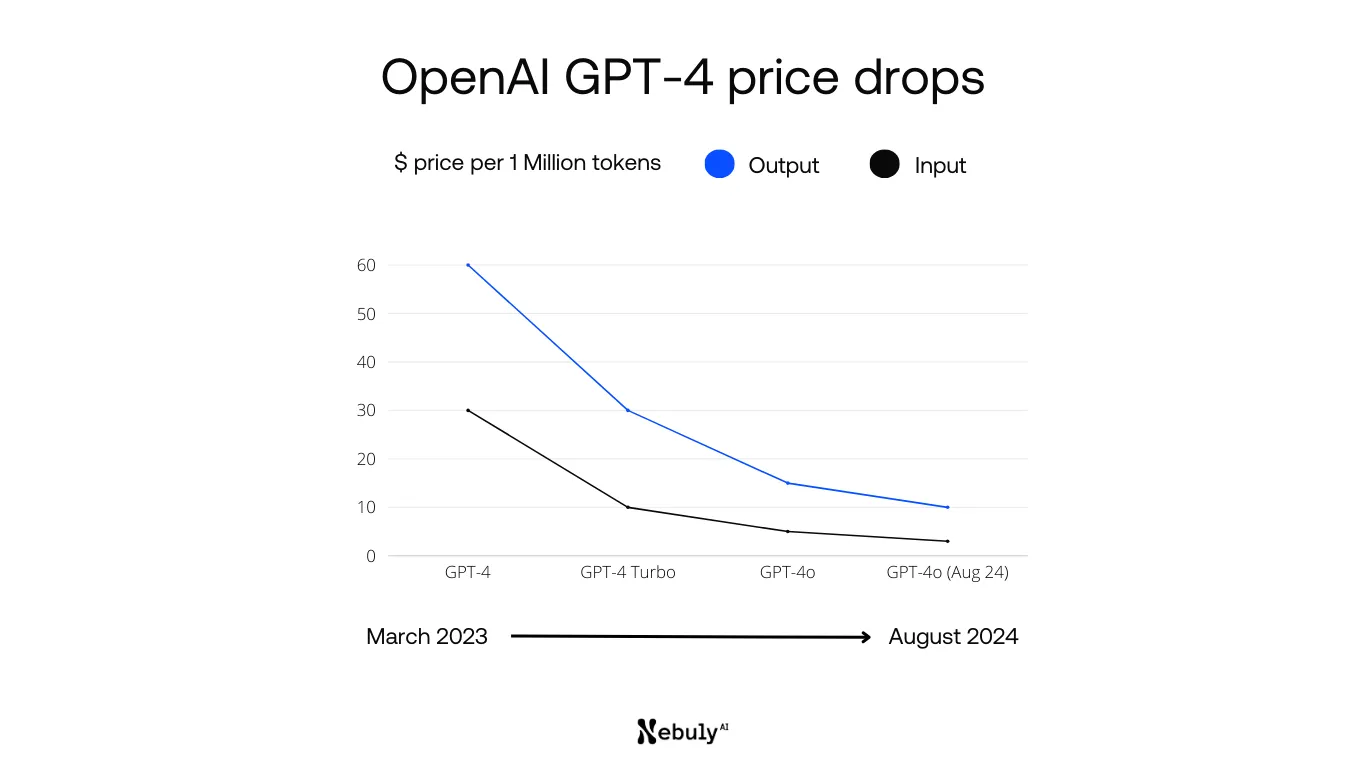

As for inference, the computational cost is also decreasing. The price of OpenAI’s API queries for the GPT-4 family of models has steadily decreased over the past 2 years. This suggests that the energy consumption of the models has decreased as well, although price is an imperfect signal: OpenAI reportedly loses billions every year, so its prices don’t necessarily reflect its costs.

In summary, two significant trends are emerging:

- Smaller and more energy-efficient models that perform very well.

- Larger, more power-hungry models with diminishing returns.

Ironically, most of the progress in AI energy efficiency comes from China, where energy and GPUs are scarce. Companies based in the US, where innovation is fueled by speculation, seem more interested in adding more and more parameters to their models.

Does AI Emit That Much?

Let’s put the energy consumption of AI into perspective.

I wrote above that a recent estimate of ChatGPT energy use is 0.3 Wh per query. This is in line with other online services: a standard Google search reportedly uses 0.3 Wh of electricity. That’s more than loading a static webpage, but less than watching a YouTube video.

Besides, data centers typically represent less than 15% of the overall carbon footprint of using a web service. The rest comes from the network, the user’s device, and the production of the equipment.

Another interesting perspective is that 0.3 Wh is less energy than a 10 W lightbulb uses in 5 minutes.

In fact, converted to 10 W lightbulb time, a ChatGPT query corresponds to 1.8 minutes of use, about the same as the average duration of a ChatGPT session. To summarize, using ChatGPT consumes about as much energy as keeping a lightbulb on.
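The lightbulb comparison is a simple conversion: minutes of use = energy (Wh) / power (W) × 60. The sketch below reproduces both figures from the text; the function names are just illustrative.

```python
def bulb_minutes(energy_wh: float, bulb_watts: float = 10.0) -> float:
    """How many minutes a bulb of `bulb_watts` runs on `energy_wh` watt-hours."""
    return energy_wh / bulb_watts * 60

def bulb_energy_wh(minutes: float, bulb_watts: float = 10.0) -> float:
    """Energy in Wh used by a bulb of `bulb_watts` running for `minutes`."""
    return bulb_watts * minutes / 60

print(round(bulb_minutes(0.3), 2))   # 1.8 minutes for one 0.3 Wh query
print(round(bulb_energy_wh(5), 2))   # 0.83 Wh for 5 minutes, more than 0.3 Wh
```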

If you worry about the environmental impact of your online actions, you should first reduce your screen time and turn off your set-top box when you’re not using it, before selecting web services based on their energy consumption.

Using AI May Be a Net Gain

It’s a good thing that we worry about the environmental impact of our online actions. While we’re at it, let’s look beyond AI.

In my experience, asking ChatGPT a question saves a few Google searches (not accounting for browsing the top search results, which are often not relevant). I’ve already mentioned that a Google search and a ChatGPT query consume about the same amount of energy. So in that case, using AI would be a net gain.
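With one ChatGPT query and one Google search both costing about 0.3 Wh, the net-gain argument is simple arithmetic. The sketch below uses those two figures from the text; the number of searches a query replaces is an assumption you can vary, and the sketch ignores the energy of loading and reading result pages, which would only add to the searches’ side.

```python
WH_PER_SEARCH = 0.3     # reported figure for a standard Google search
WH_PER_AI_QUERY = 0.3   # epoch.ai estimate for a ChatGPT query, quoted above

def net_savings_wh(searches_replaced: int) -> float:
    """Energy saved (positive) or added (negative) by one AI query that replaces some searches."""
    return searches_replaced * WH_PER_SEARCH - WH_PER_AI_QUERY

for n in (1, 3):
    print(f"replacing {n} search(es): {net_savings_wh(n):+.1f} Wh")
```

Replacing a single search is roughly break-even; anything beyond that is a saving under these assumptions.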

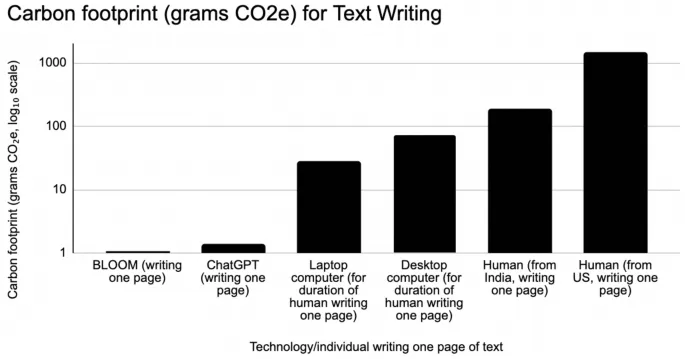

This may be true for other activities, too. A study published in Scientific Reports, a Nature Portfolio journal, concluded that the carbon emissions of writing and illustrating are lower for AI than for humans.

These days, AI is used to save energy in many fields. Here are a few examples:

- Google leveraged DeepMind’s neural networks to dynamically optimize data center cooling, cutting the energy used for cooling by up to 40% and achieving a 15% reduction in overall PUE (Power Usage Effectiveness).

- Netflix uses AI-powered content-aware encoding to save roughly 20% bandwidth on average without sacrificing quality.

- California used AI forecasts plus optimized battery storage to cut solar energy curtailment by 18%.

- In industry, AI-driven predictive maintenance typically saves 8-12% in maintenance costs.

And beyond energy, LLMs and AI have already proved that they can help us tremendously in countless domains, from translating languages to diagnosing diseases. Using AI for these tasks reduces the time and energy we spend.

To determine the net environmental impact of AI on a process, we need to do a life-cycle assessment, a methodology that takes everything into account, from manufacturing to disposal. And we need to do it twice: once without AI, and once with AI. The difference between the two assessments tells us whether AI is a net gain or a net loss. I haven’t found many such studies, but my gut feeling is that the conclusion depends on the use case.
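The “do it twice” comparison can be sketched as two sets of life-cycle stages whose totals are subtracted. The stage names, the `Assessment` type, and the numbers below are hypothetical placeholders, not results from any real assessment; a real LCA follows a standardized methodology (such as ISO 14040/14044) and covers more impact categories than carbon alone.

```python
from typing import Dict

# Hypothetical life-cycle stages, in kg CO2e per unit of work (e.g. one document).
Assessment = Dict[str, float]

def total_footprint(stages: Assessment) -> float:
    """Sum the emissions of every life-cycle stage."""
    return sum(stages.values())

def ai_net_impact(without_ai: Assessment, with_ai: Assessment) -> float:
    """Positive result: AI adds emissions. Negative result: AI is a net gain."""
    return total_footprint(with_ai) - total_footprint(without_ai)

# Made-up numbers, for illustration only.
without_ai = {"manufacturing": 2.0, "use": 5.0, "end_of_life": 0.5}
with_ai = {"manufacturing": 2.5, "use": 3.0, "end_of_life": 0.6}

delta = ai_net_impact(without_ai, with_ai)
print("net gain" if delta < 0 else "net loss", round(delta, 2))
```

Note that the AI scenario can have a larger manufacturing footprint and still come out ahead if it reduces the use phase enough, which is exactly why both assessments are needed.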

I received direct feedback about a company using LLMs to draft legal contracts based on term sheets. It takes the LLM 15 minutes to produce a draft equivalent to what a junior lawyer would produce in 4 days. Even if the LLM consumes 10x more energy than the lightbulb estimate, this is still a net gain in energy consumption and carbon emissions.
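Here is a rough check of the legal-drafting example, using the 10x lightbulb assumption from the text (a 100 W draw for 15 minutes). The text gives no energy figure for the junior lawyer, so the sketch assumes, hypothetically, only a 50 W laptop used 8 hours a day for 4 days, ignoring lighting, heating, and commuting, all of which would make the human side larger.

```python
LLM_WATTS = 10 * 10          # 10x the 10 W lightbulb estimate
LLM_HOURS = 15 / 60          # 15 minutes of generation

LAPTOP_WATTS = 50            # hypothetical laptop power draw
WORK_HOURS = 4 * 8           # 4 days of 8 hours (assumption)

llm_wh = LLM_WATTS * LLM_HOURS        # energy for the LLM draft
human_wh = LAPTOP_WATTS * WORK_HOURS  # laptop energy only, for the junior lawyer

print(f"LLM: {llm_wh:.0f} Wh, laptop: {human_wh} Wh, ratio: {human_wh / llm_wh:.0f}x")
```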

AI Is Just Like Any Other Digital Service

Using AI could be good for the environment? This sounds awfully like Microsoft’s carbon-negative data centers and other forms of greenwashing. How could using overly complex models that consume a lot of energy be a net gain?

The latest tech news shows that AI is currently causing an increase in greenhouse gas (GHG) emissions. Google’s GHG emissions increased 13% in 2023, and Microsoft’s GHG emissions increased by 29.1% between 2020 and 2024. Both blamed the increase on AI, which made them reconsider their GHG emissions projections. And academic studies conclude that carbon neutrality is mission impossible for AI.

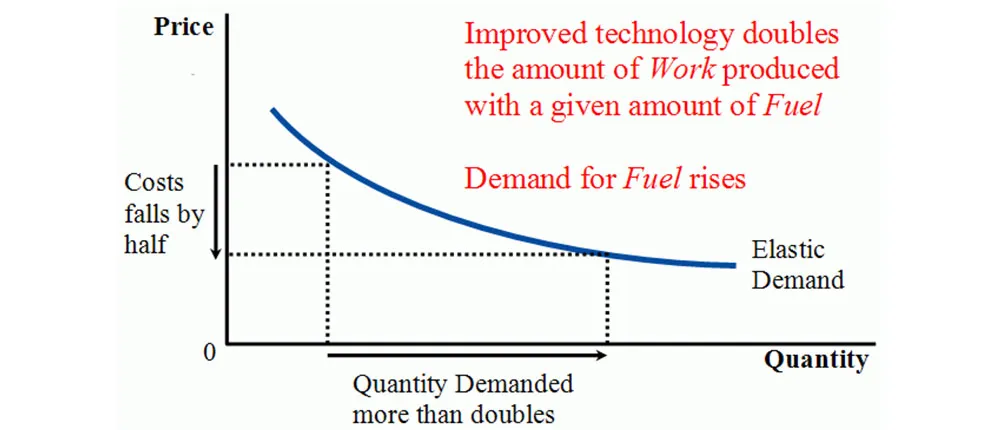

Why is this happening if AI can replace more carbon-intensive processes? My opinion is that this is caused by the Jevons Paradox, a particular case of the rebound effect:

In economics, the Jevons paradox occurs when technological advancements make a resource more efficient to use (thereby reducing the amount needed for a single application); however, as the cost of using the resource drops, if the price is highly elastic, this results in overall demand increases causing total resource consumption to rise.
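The paradox can be illustrated with a textbook constant-elasticity demand curve. In this hypothetical model, the price of a service is proportional to the resource it uses, demand is `Q = A * p**(-eps)`, and total resource use is `Q` times the resource per unit of service. Halving the resource per unit then raises total use when demand is elastic (`eps > 1`) and lowers it when demand is inelastic (`eps < 1`). The parameters are illustrative, not estimates for AI.

```python
def total_resource_use(resource_per_unit: float, elasticity: float,
                       scale: float = 1.0, cost_per_resource: float = 1.0) -> float:
    """Total resource consumed under constant-elasticity demand Q = scale * price**(-elasticity)."""
    price = cost_per_resource * resource_per_unit   # service gets cheaper as it needs less resource
    demand = scale * price ** (-elasticity)         # units of service consumed
    return demand * resource_per_unit

for eps in (0.5, 1.5):
    before = total_resource_use(1.0, eps)
    after = total_resource_use(0.5, eps)  # efficiency doubles
    print(f"elasticity {eps}: total use changes by a factor of {after / before:.2f}")
```

With an elasticity of exactly 1, the two effects cancel and total use stays constant.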

The efficiency of LLMs is increasing the overall demand for AI and accelerating the digitalization of the economy. LLMs make it possible to automate tasks that were previously done by humans, and to create new tasks that were previously impossible. This results in a productivity boost, just like the industrial revolution of the 19th century and the information revolution of the 1990s.

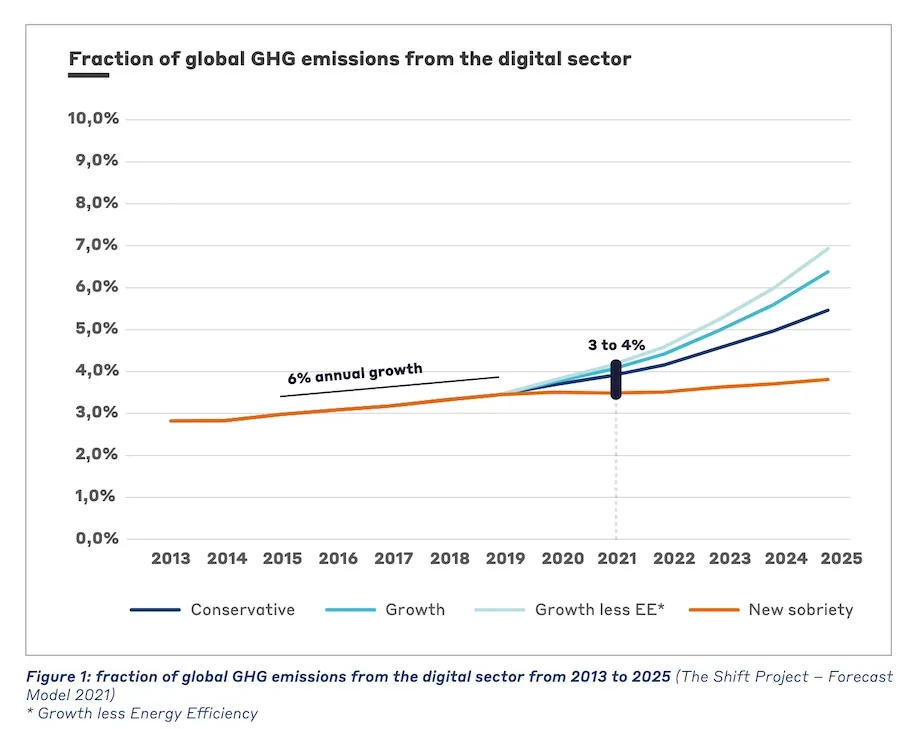

To put it differently, the increase in GHG emissions due to AI is not a new trend: it’s the continuation of the digitalization of the economy. If I worry about the environmental impact of AI, I should also monitor my consumption of digital services in general. By the way, that’s exactly what my company did: they built GreenFrame, a tool to measure the environmental impact of digital services.

Conclusion

Instead of blaming the tech, perhaps we should blame how we use it. Do we need to ask ChatGPT about the best TV series of 2024, instead of subscribing to a human-curated magazine? If AI is boosting productivity, why don’t we take advantage of it to work less and consume less? I find that the arrival of AI forces us to ask important questions that we should have asked a long time ago.

Also, we have bigger fish to fry. As a reminder, information and communication technologies (ICT) only account for 4% to 5% of global GHG emissions. If we’re concerned about climate change, why don’t we change the way we eat, travel, and heat our homes before calling for an AI boycott?

By the way, it works: regulations and digitalization have already allowed some Western countries, including my home country France, to reduce their GHG emissions (even when accounting for consumption-based emissions).

To summarize my opinion, I think that the fears around the impact of AI on climate change are overblown but useful: they help us remember that our actions have a huge environmental impact, and that we should act carefully and responsibly.

AI is definitely changing the world. As a software engineer working for a digital innovation company, I think it is my role to help non-tech people use AI the right way. So I’ll continue to learn and use AI, and to share my knowledge with my colleagues and friends.

If you have a different opinion, I’d love to hear it! Use the comment section below, or reach out to me on Bluesky.

N.B.: It took me about two days to write this article. Once I finished, I tried using ChatGPT’s Deep Research to see how long it would take it to write a similar article. It took only 7 minutes, reading 27 sources. I was impressed… but less so once I read the actual result, which gave the same weight to unknown sources as to academic papers. Also, its conclusion was inconclusive. I think humans still have a lead on AI in terms of writing quality ;)

Authors

Marmelab founder and CEO, passionate about web technologies, agile, sustainability, leadership, and open-source. Lead developer of react-admin, founder of GreenFrame.io, and regular speaker at tech conferences.